Back in July, I used MirageOS to create my first unikernel, a simple REST service for queuing file uploads, deployable as a virtual machine. While a traditional VM would be a complete Linux system (kernel, init system, package manager, shell, etc), a Mirage unikernel is a single OCaml program which pulls in just the features (network driver, TCP stack, web server, etc) it needs as libraries. Now it's time to look at securing the system with HTTPS and access controls, ready for deployment.


(This post also appeared on Hacker News and Reddit.)

Introduction

As a quick reminder, the service ("Incoming queue") accepts uploads from various contributors and queues them until the (firewalled) repository software downloads them, checks the GPG signatures, and merges them into the public software repository, signed with the repository's key:

Although the queue service isn't security critical, since the GPG signatures are made and checked elsewhere, I would like to ensure it has a few properties:

- Only the repository can fetch items from the queue.

- Only authorised users can upload to it.

- I can see where an upload came from and revoke access if necessary.

- An attacker cannot take control of the system and use it to attack other systems.

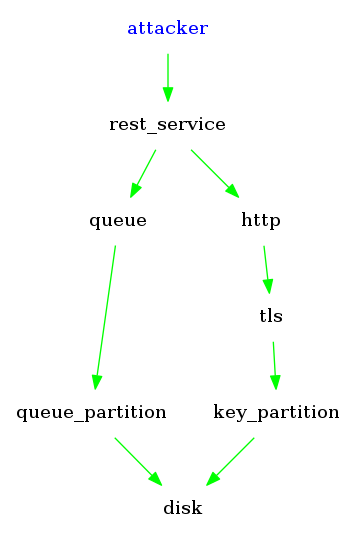

We often think of security as a set of things we want to prevent - taking away possible actions from a fundamentally vulnerable underlying system (such as my original implementation, which had no security features). But ideally I'd like every component of the system to be isolated by default, with allowed interactions (shown here by arrows) specified explicitly. We should then be able to argue (informally) that the system will meet the goals above without having to verify that every line of code is correct.

My unikernel is written in OCaml and runs as a guest OS under the Xen hypervisor, so let's look at how well those technologies support isolation first...

OCaml

I want to isolate the components of my unikernel, giving each just the access it requires. When writing an OS, some unsafe code will occasionally be needed, but it should be clear which components use unsafe features (so they can be audited more carefully), and unsafe features shouldn't be needed often.

For example, the code for handling an HTTP upload request should only be able to use our on-disk queue's Uploader interface and its own HTTP connection. Then we would know that an attacker with upload permission can only cause new items to be added to the queue, no matter how buggy that code is. It should not be able to read the web server's private key, establish new out-bound connections, corrupt the disk, etc.

Like most modern languages, OCaml is memory-safe, so components can't interfere with each other through buggy pointer arithmetic or unsafe casts of the kind commonly found in C code.

But we also need to avoid global variables, which would allow two components to communicate without us explicitly connecting them. I can't reason about the security of the system by looking at arrows in the architecture diagrams if unconnected components can magically create new arrows by themselves! I've seen a few interesting approaches to this problem (please correct me if I've got this wrong):

- Haskell

- Haskell avoids all side-effects, which makes global variables impossible (without using "unsafe" features), since updating them would be a side-effect.

- Rust

- Rust almost avoids the problem of globals through its ownership types. Since only one thread can have a pointer to a mutable value at a time, mutable values can't normally be global. Rust does allow "mutable statics" (which contain only pointers to fixed data), but requires an explicit "unsafe" block to use them, which is good.

- E

- E allows modules to declare top-level variables, but each time the module is imported it creates a fresh copy.

OCaml does allow global variables, but by convention they are generally not used.

A second problem is controlling access to the outside world, including the network and disks (which you could consider to be more global variables):

- Haskell

- Haskell doesn't allow functions to access the outside world at all, but they can return an IO type if they want to do something (the caller must then pass this value up to the top level). This makes it easy to see that e.g. evaluating a function "uriPath :: URI -> String" cannot access the network. However, it appears that all IO gets lumped in together: a value of type IO String may cause any side-effects at all (disk, network, etc), so the entire side-effecting part of the program needs to be audited.

- Rust

- Rust allows all code full access to the system via its standard library. For example, any code can read or write any file.

- E

- E passes all access to the outside world to the program's entry point. For example, <file> grants access to the file system and <unsafe> grants access to all unsafe features. These can be passed to libraries to grant them access, and can be attenuated (wrapped) to provide limited access.

For example:


Here, queue has read-write access to the /var/myprog/queue sub-tree (and nothing else).

It also has no way to share data with any other parts of the program, including other queues.
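The same attenuation idea can be sketched in OCaml. This is only an illustration: the dir record, make_root and sub are hypothetical names, and the "file system" is an in-memory table rather than E's <file> object.

```ocaml
(* A hypothetical directory capability: the only way to reach files is
   through the two functions it carries. *)
type dir = {
  read : string -> string option;
  write : string -> string -> unit;
}

(* The "root" capability, backed by an in-memory table (an assumption made
   so the example is self-contained; no real disk is involved). *)
let make_root () =
  let files = Hashtbl.create 16 in
  { read = (fun path -> Hashtbl.find_opt files path);
    write = (fun path data -> Hashtbl.replace files path data) }

(* Attenuation: wrap [parent] so that every path is forced under [prefix].
   Paths containing ".." are rejected so the holder cannot climb back out. *)
let sub parent prefix =
  let resolve path =
    if List.mem ".." (String.split_on_char '/' path) then
      invalid_arg "path escapes sub-tree"
    else prefix ^ "/" ^ path
  in
  { read = (fun path -> parent.read (resolve path));
    write = (fun path data -> parent.write (resolve path) data) }

let () =
  let root = make_root () in
  root.write "/etc/secret" "private key";
  let queue_dir = sub root "/var/myprog/queue" in
  queue_dir.write "item1" "upload";
  assert (root.read "/var/myprog/queue/item1" = Some "upload");
  (* The attenuated view has no name for anything outside its sub-tree. *)
  assert (queue_dir.read "/etc/secret" = None)
```

Code that is handed only queue_dir never sees root, so the limit on what it can touch follows from what it was given rather than from an audit of its body.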

Like Rust, OCaml does not limit access to the outside world.

However, Mirage itself uses E-style dependency injection everywhere, with the unikernel's start function being passed all external resources as arguments:


Because everything in Mirage is defined using abstract types, libraries always expect to be passed the things they need explicitly.

We know that Upload_queue above won't access a block device directly because it needs to support different kinds of block device.

OCaml does enforce its abstractions.

There's no way for the Upload_queue to discover that block is really a Xen block device with some extra functionality

(as a Java program might do with if (block instanceof XenBlock), for example).

This means that we can reason about the limits of what functions may do by looking only at their type signatures.

The use of functors means you can attenuate access as desired.

For example, if we want to grant just part of block to the queue then we can create our own module implementing the BLOCK type that exposes just some partition of the device, and pass that to the Upload_queue.Make functor.
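As a sketch of that attenuation, here is a cut-down version. The BLOCK signature below is a simplified, synchronous stand-in invented for the example (Mirage's real one differs), and Ram and Partition are hypothetical names rather than the ocaml-mbr modules.

```ocaml
(* Simplified stand-in for a block device signature (assumption). *)
module type BLOCK = sig
  type t
  val sectors : t -> int64
  val read : t -> int64 -> string
  val write : t -> int64 -> string -> unit
end

(* An in-memory device, one string per sector, for the example only. *)
module Ram : BLOCK with type t = string array = struct
  type t = string array
  let sectors t = Int64.of_int (Array.length t)
  let read t n = t.(Int64.to_int n)
  let write t n data = t.(Int64.to_int n) <- data
end

(* [Partition (B)] is itself a BLOCK. It exposes sectors
   [first, first + length) of the underlying device as 0 .. length - 1.
   Its type [t] is abstract, so users cannot reach the device inside. *)
module Partition (B : BLOCK) : sig
  include BLOCK
  val connect : B.t -> first:int64 -> length:int64 -> t
end = struct
  type t = { dev : B.t; first : int64; length : int64 }

  let connect dev ~first ~length =
    if first < 0L || length < 0L || first > Int64.sub (B.sectors dev) length
    then invalid_arg "partition outside device";
    { dev; first; length }

  let sectors t = t.length

  let check t n =
    if n < 0L || n >= t.length then invalid_arg "sector outside partition"

  let read t n = check t n; B.read t.dev (Int64.add t.first n)
  let write t n data = check t n; B.write t.dev (Int64.add t.first n) data
end

module P = Partition (Ram)

let () =
  let disk = Array.make 16 "" in
  disk.(0) <- "private key";
  let queue_part = P.connect disk ~first:8L ~length:8L in
  P.write queue_part 0L "upload";
  (* Sector 0 of the partition is sector 8 of the disk, never the key. *)
  assert (disk.(8) = "upload");
  assert (P.read queue_part 0L = "upload")
```

A queue built by applying a functor to P sees only a BLOCK with eight sectors; nothing in its signature tells it that a larger device sits underneath.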

In summary then, we can reason about security fairly well in Mirage if we assume the libraries are not malicious, but we cannot make hard guarantees. It should be possible to check with automatic static analysis that we're not using any "unsafe" features such as global variables, direct access to devices, or allocating uninitialised memory, but I don't know of any tools to do that (except Emily, but that seems to be just a proof-of-concept). But these issues are minor: any reasonably safe modern language will be a huge improvement over legacy C or C++ systems!

Xen

The Xen hypervisor allows multiple guest operating systems to run on a single physical machine. It is used by many cloud hosting providers, including Amazon's AWS. I run it on my CubieTruck - a small ARM board. Xen allows me to run my unikernel on the same machine as other services, but ideally with the same security properties as if it had its own dedicated machine. If some other guest on the machine is compromised, it shouldn't affect my unikernel, and if the unikernel is compromised then it shouldn't affect other guests.

A typical Xen deployment, running four domains.


The diagram above shows a deployment with Linux and Mirage guests. Only dom0 has access to the physical hardware; the other guests only see virtual devices, provided by dom0.

How secure is Xen? The Xen security advisories page shows that there are about 3 new Xen advisories each month. However, it's hard to compare programs this way because the number of vulnerabilities reported depends greatly on the number of people using the program, whether they use it for security-critical tasks, and how seriously the project takes problems (e.g. whether a denial-of-service attack is considered a security bug).

I started using Xen in April 2014. These are the security problems I've found myself so far:

- XSA-93: Hardware features unintentionally exposed to guests on ARM

- While trying to get Mini-OS to boot, I tried implementing ARM's recommended boot code for invalidating the cache (at startup, you should invalidate the cache, dropping any previous contents). When running under a hypervisor this should be a no-op since the cache is always valid by the time a guest is running, but Xen allowed the operation to go ahead, discarding pending writes by the hypervisor and other guests and crashing the system. This could probably be used for a successful attack.

- XSA-94: Hypervisor crash setting up GIC on arm32

- I tried to program an out-of-range register, causing the hypervisor to dereference a null pointer and panic. This is just a denial of service (the host machine needs to be rebooted); it shouldn't allow access to other VMs.

- XSA-95: Input handling vulnerabilities loading guest kernel on ARM

- I got the length wrong when creating the zImage for the unikernel (I included the .bss section in the length). The xl create tool didn't notice and tried to read the extra data, causing the tool to segfault. You could use this to read a bit of private data from the xl process, but it's unlikely there would be anything useful there.

Although that's more bugs than you might expect, note that they're all specific to the relatively new ARM support. The second and third are both due to using C, and would have been avoided in a safer language. I'm not really sure why the "xl" tool needs to be in C - that seems to be asking for trouble.

To drive the physical hardware, Xen runs the first guest (dom0) with access to everything. This is usually Linux, and I had various problems with that. For example:

- Bug sharing the same page twice

- Linux got confused when the unikernel shared the same page twice (it split a single page of RAM into multiple TCP segments). This wasn't a security bug (I think), but after it was fixed, I then got:

- Page still granted

- If my unikernel sent a network packet and then exited quickly, dom0 would get stuck and I'd have to reboot.

- Oops if network is used too quickly

- The Linux dom0 initialises the virtual network device while the VM is booting. My unikernel booted fast enough to send packets before the device structure had been fully filled in, leading to an oops.

- Linux Dom0 oops and reboot on indirect block requests

- Sending lots of block device requests to Linux dom0 from the unikernel would cause Linux to oops in swiotlb_tbl_unmap_single and reboot the host. I wasn't the first to find this though, and backporting the patch from Linux 3.17 seemed to fix it (I don't actually know what the problem was).

So it might seem that using Xen doesn't get us very far. We're still running Linux in dom0, and it still has full access to the machine. For example, a malicious network packet from outside or from a guest might still give an attacker full control of the machine. Why not just use KVM and run the guests under Linux directly?

The big (potential) advantage of Xen here is Dom0 Disaggregation. With this, Dom0 gives control of different pieces of physical hardware to different VMs rather than driving them itself. For example, Qubes (a security-focused desktop OS using Xen) runs a separate "NetVM" Linux guest just to handle the network device. This is connected only to the FirewallVM - another Linux guest that just routes packets to other VMs.

This is interesting for two reasons. First, if an attacker exploits a bug in the network device driver, they're still outside your firewall. Secondly, it provides a credible path to replacing parts of Linux with alternative implementations, possibly written in safer languages. You could, for example, have Linux running dom0 but use FreeBSD to drive the network card, Mirage to provide the firewall, and OpenBSD to handle USB.

Finally, it's worth noting that Mirage is not tied to Xen, but can target various systems (mainly Unix and Xen currently, but there is some JavaScript support too). If it turns out that e.g. Genode on seL4 (a formally verified microkernel) provides better security, we should be able to support that too.

Transport Layer Security

We won't get far securing the system while attackers can read and modify our communications. The ocaml-tls project provides an OCaml implementation of TLS (Transport Layer Security), and in September Hannes Mehnert showed it running on Mirage/Xen/ARM devices. Given the various flaws exposed recently in popular C TLS libraries, an OCaml implementation is very welcome. Getting the Xen support in a state where it could be widely used took a bit of work, but I've submitted all the patches I made, so it should be easier for other people now - see https://github.com/mirage/mirage-dev/pull/52.

C stubs for Xen

TLS needs some C code for the low-level cryptographic functions, which have to be constant time to avoid leaking information about the key, so first I had to make packages providing versions of libgmp, ctypes, zarith and nocrypto compiled to run in kernel mode.

C programs need to be compiled specially to run in kernel mode because on x86-64 processors user-mode code can assume the existence of a red zone, which allows some optimisations that aren't safe in kernel mode.

Ethernet frame alignment

The Mirage network driver sends Ethernet frames to dom0 by sharing pages of memory. Each frame must therefore be contained in a single page. The TLS code was (correctly) passing a large buffer to the TCP layer, which incorrectly asked the network device to send each TCP-sized chunk of it. Chunks overlapping page boundaries then got rejected.

My previous experiments with tracing the network layer had shown that we actually share two pages for each packet: one for the IP header and one for the payload. Doing this avoids the need to copy the data to a new buffer, but adds the overhead of granting and revoking access to both pages. I modified the network driver to copy the data into a single block inside a single page and got a large speed boost. Indeed, it got so much faster that it triggered a bug handling full transmit buffers - which made it initially appear slower!

In addition to fixing the alignment problem when using TLS, and being faster, this has a nice security benefit: the only data shared with the network driver domain is data explicitly sent to it. Before, we had to share the entire page of memory containing the application's buffer, and there was no way to know what else might have been there. This offers some protection if the network driver domain is compromised.
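To make the page constraint concrete, the sketch below assumes 4096-byte pages and uses plain Bytes buffers instead of the driver's real shared-memory pages. The names within_one_page and frame_in_fresh_page are illustrative, not functions from the Mirage network driver.

```ocaml
(* Assumed page size; the real value comes from the platform. *)
let page_size = 4096

(* True if the [len] bytes starting at byte offset [off] (with [off] >= 0)
   all lie within a single page. *)
let within_one_page ~off ~len =
  len > 0 && len <= page_size
  && off / page_size = (off + len - 1) / page_size

(* Copy header and payload into a fresh, zero-filled page-sized buffer, so
   that only these bytes (plus padding) would be shared with the driver
   domain. Returns the buffer and the number of bytes used. *)
let frame_in_fresh_page ~header ~payload =
  let hlen = String.length header and plen = String.length payload in
  if hlen + plen > page_size then invalid_arg "frame larger than a page";
  let page = Bytes.make page_size '\000' in
  Bytes.blit_string header 0 page 0 hlen;
  Bytes.blit_string payload 0 page hlen plen;
  (page, hlen + plen)
```

A chunk at offset 4000 with length 200 fails within_one_page because it straddles the boundary at 4096, which is the kind of request that was being rejected.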

HTTP API

My original code configured a plain HTTP server on port 8080 like this:


stack creates a TCP/IP stack.

conduit_direct can dynamically select different transports (e.g. http or vchan).

http_server applies the configuration to the conduit to get an HTTP server using plain HTTP.

I added support to Conduit_mirage to let it wrap any underlying conduit with TLS.

However, the configuration needed for TLS is fairly complicated, and involves a secret key which must be protected.

Therefore, I switched to creating only the conduit in config.ml and having the unikernel itself load the key and certificate by copying a local "keys" directory into the unikernel image as a "crunch" filesystem:


This runs a secure HTTPS server on port 8443. The rest of the code is as before.

The private key

The next question is where to store the real private key. The examples provided by the TLS package compile it into the unikernel image using crunch, but it's common to keep unikernel binaries in Git repositories and people don't expect kernel images to contain secrets. In a traditional Linux system, we'd store the private key on the disk, so I decided to try the same here. (I did think about storing the key encrypted in the unikernel and storing the password on the disk so you'd need both to get the key, but the TLS library doesn't support encrypted keys yet.)

I don't use a regular filesystem for my queuing service, and I wouldn't want to share it with the key if I did, so instead I reserved a separate 4KB partition of the disk for the key.

It turned out that Mirage already has partitioning support in the form of the ocaml-mbr library.

I didn't actually create an MBR at the start, but just used the Mbr_partition functor to wrap the underlying block device into two parts.

The configuration looks like this:

How safe is this?

I don't want to audit all the code for handling the queue, and I shouldn't have to: we can see from the diagram that the only components with access to the key are the disk, the partitions and the TLS library.

We need to trust that the TLS library will protect the key (not easy, but that's its job) and that queue_partition won't let queue access the part of the disk with the key.

We also need to trust the disk, but if the partitions are only allowing correct requests through, that shouldn't be too much to ask.

The partition code

Before relying on the partition code, we'd better take a look at it because it may not be designed to enforce security. Indeed, a quick look at the code shows that it isn't:


It checks only that the requested start sector plus the length of the results buffer is less than the length of the partition. To (hopefully) make this bullet-proof, I:

- moved the checks into a single function so we don't have to check two copies,

- added a check that the start sector isn't negative,

- modified the end check to avoid integer overflow, and

- added some unit-tests.

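The shape of such a check can be sketched as follows. This is not the ocaml-mbr code: in_range and its labels are made-up names, and it assumes the partition length itself is non-negative.

```ocaml
(* [in_range ~partition_sectors ~start ~count] is true iff the [count]
   sectors beginning at [start] all lie inside a partition that is
   [partition_sectors] sectors long. *)
let in_range ~partition_sectors ~start ~count =
  start >= 0L
  && count >= 0L
  && count <= partition_sectors
  (* Written as a subtraction so that nothing can wrap around: at this
     point both operands are known to lie in [0, partition_sectors]. *)
  && start <= Int64.sub partition_sectors count

(* The naive form, for comparison: [start + count] can overflow. *)
let in_range_naive ~partition_sectors ~start ~count =
  Int64.add start count <= partition_sectors

let () =
  assert (in_range ~partition_sectors:8L ~start:0L ~count:8L);
  assert (not (in_range ~partition_sectors:8L ~start:1L ~count:8L));
  assert (not (in_range ~partition_sectors:8L ~start:(-1L) ~count:1L));
  (* The overflow case: rejected by the safe check, accepted by the naive one. *)
  assert (not (in_range ~partition_sectors:8L ~start:Int64.max_int ~count:1L));
  assert (in_range_naive ~partition_sectors:8L ~start:Int64.max_int ~count:1L)
```

Keeping the whole test in one function means reads and writes share a single copy of the logic, and the unit tests only have to exercise that one place.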

The need to protect against overflow is an annoyance.

OCaml's Int64.(add max_int one) doesn't abort, but returns Int64.min_int.

That's disappointing, but not surprising.

I wrote a unit-test that tried to read sector Int64.max_int and ran it (before updating the code) to check it detected the problem.

I was expecting the partition code to pass the request to the underlying block device, which I expected to return an error about the invalid sector, but it didn't!

It turns out, Int64.to_int (used by my in-memory test block device) silently truncates out-of-range integers:


So, if the queue can be tricked into asking for sector 9223372036854775807 then the partition would accept it as valid and the block device would truncate it and give access to sector 0 - the sector with the private key!
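Both wrap-around behaviours are easy to confirm with the standard library alone. The checked conversion at the end, int_of_int64_exn, is a hypothetical helper showing one way a block device could refuse such values. What a particular device then does with a truncated sector number depends on its own arithmetic, which is not reproduced here.

```ocaml
let () =
  (* 64-bit addition wraps instead of raising. *)
  assert (Int64.add Int64.max_int 1L = Int64.min_int);
  (* Int64.to_int keeps only the low bits that fit in a native int, so the
     largest possible sector number silently becomes a different value. *)
  assert (Int64.to_int Int64.max_int = -1)

(* A checked conversion that refuses values a native int cannot hold. *)
let int_of_int64_exn n =
  if n < Int64.of_int min_int || n > Int64.of_int max_int then
    invalid_arg "int_of_int64_exn: out of range"
  else Int64.to_int n
```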

Still, this is a nice demonstration of how we can add security in Mirage by inserting a new module (Mbr_partition) between two existing ones.

Rather than having some complicated fixed policy language (e.g. SELinux), we can build whatever security abstractions we like.

Here I just limited which parts of the disk the queue could access, but we could do many other things: make a partition read-only, make it readable only until the unikernel finishes initialising, apply rate-limiting on reads, etc.

Here's the final code. It:

- Takes a block device, a conduit, and a KV store as inputs.

- Creates two partitions (views) onto the block device.

- Creates a queue on one partition.

- Reads the private key from the other, and the certificate from the KV store.

- Begins serving HTTPS requests.


Entropy

Another issue is getting good random numbers, which is required for the cryptography. On start-up, the unikernel displayed:

Entropy_xen_weak: using a weak entropy source seeded only from time.

To fix this, you need to use Dave Scott's version (with a slight patch from me):

opam pin add mirage-entropy-xen 'https://github.com/talex5/mirage-entropy.git#handshake'

You should then see:

Entropy_xen: attempting to connect to Xen entropy source org.openmirage.entropy.1

Console.connect org.openmirage.entropy.1: doesn't currently exist, waiting for hotplug

Now run xentropyd in dom0 to share the host's entropy with guests.

The interesting question here is what Linux guests do for entropy, especially on ARM where there's no RdRand instruction.

Access control

Traditional means of access control involve issuing users with passwords or X.509 client certificates, which they share with the software they're running. All requests sent by the client can then be authenticated as coming from them and approved based on some access control policy. This approach leads to all the well-known problems with traditional access control: the confused deputy problem, Cross-Site Request Forgery, Clickjacking, etc, so I want to avoid that kind of "ambient authority".

The previous diagram let us reason about how the different components within the unikernel could interact with each other, showing the possible (initial) interactions with arrows. Now I want to stretch arrows across the Internet, so I can reason in the same way about the larger distributed system that includes my queue service with the uploaders and downloaders.

Like C pointers, traditional web URLs do not give us what we want: a compromised CA anywhere in the world will allow an attacker to impersonate our service, and our URLs may be guessable. Instead, I decided to try a YURL:

"[...] the identifier MUST provide enough information to: locate the target site; authenticate the target site; and, if required, establish a private communication channel with the target site. A URL that meets these requirements is a YURL."

The latest version of this (draft) scheme I could find was some brief notes in HTTPSY (2014), which uses the format:

httpsy://algorithm:fingerprint@domain:port/path1/!redactedPath2/…

There are two parts we need to consider: how the client determines that it is connected to the real service, and how the service determines what the client can do.

To let the client authenticate the server without relying on the CA system, YURLs include a hash (fingerprint) of the server's public key. You can get the fingerprint of an X509 certificate like this:

$ openssl x509 -in server.pem -fingerprint -sha256 -noout

SHA256 Fingerprint=3F:27:2D:E6:D6:3D:7C:08:E0:E3:EF:02:A8:DA:9A:74:62:84:57:21:B4:72:39:FD:D0:72:0E:76:71:A5:E9:94

Base32-encoding shortens this to h4ts3zwwhv6aryhd54bkrwu2orriivzbwrzdt7oqoihhm4nf5gka.

Alternatively, to get the value with OCaml, use:

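The base32 step can also be reproduced with just the standard library. The sketch below takes the colon-separated hex printed by openssl and encodes it with the lowercase RFC 4648 alphabet and no padding. The names bytes_of_fingerprint and base32 are made up for this example, and it does not compute the SHA-256 hash itself.

```ocaml
(* Lowercase RFC 4648 base32 alphabet. *)
let alphabet = "abcdefghijklmnopqrstuvwxyz234567"

(* Turn "3F:27:..." into the raw bytes it denotes. *)
let bytes_of_fingerprint s =
  String.split_on_char ':' s
  |> List.map (fun hex -> Char.chr (int_of_string ("0x" ^ hex)))
  |> List.to_seq |> String.of_seq

(* Base32-encode [raw], five bits at a time, without '=' padding. *)
let base32 raw =
  let buf = Buffer.create 52 in
  let acc = ref 0 and bits = ref 0 in
  String.iter (fun c ->
    acc := (!acc lsl 8) lor Char.code c;
    bits := !bits + 8;
    while !bits >= 5 do
      bits := !bits - 5;
      Buffer.add_char buf alphabet.[(!acc lsr !bits) land 31]
    done;
    (* Keep only the bits that have not been emitted yet. *)
    acc := !acc land ((1 lsl !bits) - 1)
  ) raw;
  (* Left-align any leftover bits in a final character. *)
  if !bits > 0 then
    Buffer.add_char buf alphabet.[(!acc lsl (5 - !bits)) land 31];
  Buffer.contents buf

let () =
  let fp =
    "3F:27:2D:E6:D6:3D:7C:08:E0:E3:EF:02:A8:DA:9A:74:\
     62:84:57:21:B4:72:39:FD:D0:72:0E:76:71:A5:E9:94" in
  assert (base32 (bytes_of_fingerprint fp)
          = "h4ts3zwwhv6aryhd54bkrwu2orriivzbwrzdt7oqoihhm4nf5gka")
```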

To control what each user of the service can do, we give each user a unique YURL containing a Swiss number, which is like a password except that it applies only to a specific resource, not to the whole site.

The Swiss number comes after the ! in the URL, which indicates to browsers that it shouldn't be displayed, included in Referer headers, etc.

You can use any unguessably long random string here (I used pwgen 32 1).

After checking the server's fingerprint, the client requests the path with the Swiss number included.

Putting it all together, then, a sample URL to give to the downloader looks like this:

httpsy://sha256:h4ts3zwwhv6aryhd54bkrwu2orriivzbwrzdt7oqoihhm4nf5gka@10.0.0.2:8443/downloader/!eequuthieyexahzahShain0abeiwaej4

The old code for handling requests looked like this:


This becomes:


I hashed the Swiss number here so that the unikernel doesn't have to contain any secrets and I therefore don't have to worry about timing attacks. Even if the attacker knows the hash we're looking for, they still shouldn't be able to generate a URL which hashes to that value.

By giving each user of the service a different Swiss number we can keep records of who authorised each request and revoke access individually if needed (here the ~user:"Alice" indicates this is the uploader URL we gave to Alice).
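The lookup-by-hash idea can be sketched in a few lines. Everything here is hypothetical: the role type, hash_swiss, swiss_of_path and authorise are illustrative names, the Swiss numbers are dummies, and MD5 from the standard library's Digest module stands in for SHA-256 only because the standard library has no SHA-256. A real service should use a proper cryptographic hash. (List.find_map needs OCaml 4.10 or later.)

```ocaml
type role = Downloader | Uploader of string  (* uploader carries a user name *)

(* Stand-in hash (assumption): MD5 via Digest, NOT suitable for real use. *)
let hash_swiss swiss = Digest.to_hex (Digest.string swiss)

(* Pull the Swiss number out of a path such as "/downloader/!abc". *)
let swiss_of_path path =
  String.split_on_char '/' path
  |> List.find_map (fun seg ->
       let n = String.length seg in
       if n > 1 && seg.[0] = '!' then Some (String.sub seg 1 (n - 1))
       else None)

(* [table] maps hex hashes to roles; it never holds the Swiss numbers. *)
let authorise table path =
  match swiss_of_path path with
  | None -> None
  | Some swiss -> List.assoc_opt (hash_swiss swiss) table

let () =
  (* In practice the hashes would be computed offline and pasted in, so the
     image contains no secrets; they are computed inline here for brevity. *)
  let table = [
    hash_swiss "dummy-downloader-secret", Downloader;
    hash_swiss "dummy-alice-secret", Uploader "Alice";
  ] in
  assert (authorise table "/downloader/!dummy-downloader-secret" = Some Downloader);
  assert (authorise table "/uploader/!dummy-alice-secret" = Some (Uploader "Alice"));
  assert (authorise table "/uploader/!wrong-guess" = None)
```

Revoking Alice's access is then a matter of deleting her entry from the table, and the role returned for each request records whose URL was used.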

Of course, the YURLs need to be sent to users securely too. In my case, the users already have known GPG keys, so I can just email them an encrypted version.

Python client

The downloader (0repo) is written in Python, so the next step was to check that it could still access the service. The Python SSL API was rather confusing, but this seems to work:


Conclusions

MirageOS should allow us to build systems that are far more secure than traditional operating systems. By starting with isolated components and then connecting them together in a controlled way we can feel some confidence that our security goals will be met.

At the language level, OCaml's abstract types and functors make it easy to reason informally about how the components of our system will interact. Mirage passes values granting access to the outside world (disks, network cards, etc) to our unikernel's start function.

Our code can then delegate these permissions to the rest of the code in a controlled fashion.

For example, we can grant the queuing code access only to its part of the disk (and not the bit containing the TLS private key) by wrapping the disk in a partition functor.

Although OCaml doesn't actually prevent us from bypassing this system and accessing devices directly, code that does so would not be able to support the multiple different back-ends (e.g. Unix and Xen) that Mirage requires and so could not be written accidentally.

It should be possible for a static analysis tool to verify that modules don't do this.

Moving up a level from separating the components of our unikernel, Xen allows us to isolate multiple unikernels and other VMs running on a single physical host. Just as we interposed a disk partition between the queue and the disk within the unikernel, we can use Xen to interpose a firewall VM between the physical network device and our unikernel.

Finally, the use of transport layer security and YURLs allows us to continue this pattern of isolation to the level of networks, so that we can reason in the same way about distributed systems. My current code mixes the handling of YURLs with the existing application logic, but it should be possible to abstract this and make it reusable, so that remote services appear just like any local service. In many systems this is awkward because local APIs are used synchronously while remote ones are asynchronous, but in Mirage everything is non-blocking anyway, so there is no difference.

I feel I should put some kind of warning here about these very new security features not being ready for real use and how you should instead use mature, widely deployed systems such as Linux and OpenSSL. But really, can it be any worse?

If you've spotted any flaws in my reasoning or code, please add comments!

The code for this unikernel can be found on the tls branch at https://github.com/0install/0repo-queue/tree/tls.