The vchan protocol is used to stream data between virtual machines on a Xen host without needing any locks. It is largely undocumented. The TLA Toolbox is a set of tools for writing and checking specifications. In this post, I'll describe my experiences using these tools to understand how the vchan protocol works.

Table of Contents

- Background

- Is TLA useful?

- Basic TLA concepts

- The real vchan

- Experiences with TLAPS

- The final specification

- The original bug

- Conclusions

(This post also appeared on Reddit, Hacker News and Lobsters.)

Background

Qubes and the vchan protocol

I run QubesOS on my laptop. A QubesOS desktop environment is made up of multiple virtual machines. A privileged VM, called dom0, provides the desktop environment and coordinates the other VMs. dom0 doesn't have network access, so you have to use other VMs for doing actual work. For example, I use one VM for email and another for development work (these are called "application VMs"). There is another VM (called sys-net) that connects to the physical network, and yet another VM (sys-firewall) that connects the application VMs to sys-net.

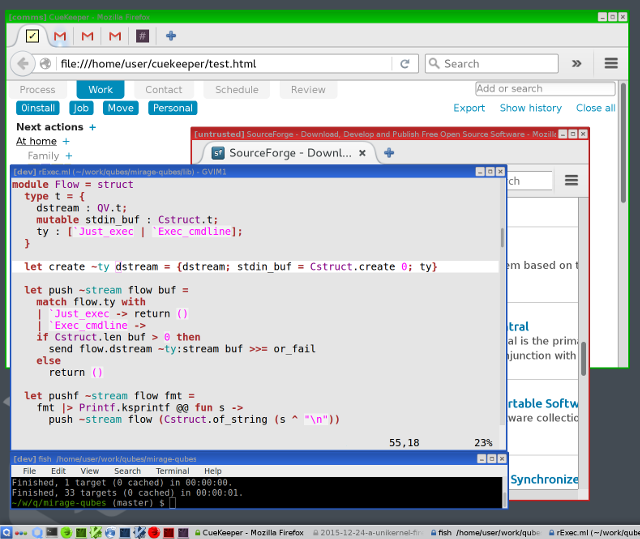

My QubesOS desktop. The windows with blue borders are from my Debian development VM, while the green one is from a Fedora VM, etc.


The default sys-firewall is based on Fedora Linux. A few years ago, I replaced sys-firewall with a MirageOS unikernel. MirageOS is written in OCaml, and has very little C code (unlike Linux). It boots much faster and uses much less RAM than the Fedora-based VM. But recently, a user reported that restarting mirage-firewall was taking a very long time. The problem seemed to be that it was taking several minutes to transfer the information about the network configuration to the firewall. This is sent over vchan. The user reported that stracing the QubesDB process in dom0 revealed that it was sleeping for 10 seconds between sending the records, suggesting that a wakeup event was missing.

The lead developer of QubesOS said:

I'd guess missing evtchn trigger after reading/writing data in vchan.

Perhaps ocaml-vchan, the OCaml implementation of vchan, wasn't implementing the vchan specification correctly? I wanted to check, but there was a problem: there was no vchan specification.

The Xen wiki lists vchan under Xen Document Days/TODO. The initial Git commit on 2011-10-06 said:

libvchan: interdomain communications library

This library implements a bidirectional communication interface between applications in different domains, similar to unix sockets. Data can be sent using the byte-oriented libvchan_read/libvchan_write or the packet-oriented libvchan_recv/libvchan_send.

Channel setup is done using a client-server model; domain IDs and a port number must be negotiated prior to initialization. The server allocates memory for the shared pages and determines the sizes of the communication rings (which may span multiple pages, although the default places rings and control within a single page).

With properly sized rings, testing has shown that this interface provides speed comparable to pipes within a single Linux domain; it is significantly faster than network-based communication.

I looked in the xen-devel mailing list around this period in case the reviewers had asked about how it worked.

One reviewer suggested:

Please could you say a few words about the functionality this new library enables and perhaps the design etc? In particular a protocol spec would be useful for anyone who wanted to reimplement for another guest OS etc. [...] I think it would be appropriate to add protocol.txt at the same time as checking in the library.

However, the submitter pointed out that this was unnecessary, saying:

The comments in the shared header file explain the layout of the shared memory regions; any other parts of the protocol are application-defined.

Now, ordinarily, I wouldn't be much interested in spending my free time tracking down race conditions in 3rd-party libraries for the benefit of strangers on the Internet. However, I did want to have another play with TLA...

TLA+

TLA+ is a language for specifying algorithms. It can be used for many things, but it is particularly designed for stateful parallel algorithms.

I learned about TLA while working at Docker. Docker EE provides software for managing large clusters of machines. It includes various orchestrators (SwarmKit, Kubernetes and Swarm Classic) and a web UI. Ensuring that everything works properly is very important, and to this end a large collection of tests had been produced. Part of my job was to run these tests. You take a test from a list in a web UI and click whatever buttons it tells you to click, wait for some period of time, and then check that what you see matches what the test says you should see. There were a lot of these tests, and they all had to be repeated on every supported platform, and for every release, release candidate or preview release. There was a lot of waiting involved and not much thinking required, so to keep my mind occupied, I started reading the TLA documentation.

I read The TLA+ Hyperbook and Specifying Systems. Both are by Leslie Lamport (the creator of TLA), and are freely available online. They're both very easy to read. The hyperbook introduces the tools right away so you can start playing, while Specifying Systems starts with more theory and discusses the tools later. I think it's worth reading both.

Once Docker EE 2.0 was released, we engineers were allowed to spend a week on whatever fun (Docker-related) project we wanted. I used the time to read the SwarmKit design documents and make a TLA model of that. I felt that using TLA prompted useful discussions with the SwarmKit developers (which can be seen in the pull request comments).

A specification document can answer questions such as:

- What does it do? (requirements / properties)

- How does it do it? (the algorithm)

- Does it work? (model checking)

- Why does it work? (inductive invariant)

- Does it really work? (proofs)

You don't have to answer all of them to have a useful document, but I will try to answer each of them for vchan.

Is TLA useful?

In my (limited) experience with TLA, whenever I have reached the end of a specification (whether reading it or writing it), I always find myself thinking "Well, that was obvious. It hardly seems worth writing a spec for that!". You might feel the same after reading this blog post.

To judge whether TLA is useful, I suggest you take a few minutes to look at the code. If you are good at reading C code then you might find, like the Xen reviewers, that it is quite obvious what it does, how it works, and why it is correct. Or, like me, you might find you'd prefer a little help. You might want to jot down some notes about it now, to see whether you learn anything new.

To give the big picture:

- Two VMs decide to communicate over vchan. One will be the server and the other the client.

- The server allocates three chunks of memory: one to hold data in transit from the client to the server, one for data going from server to client, and the third to track information about the state of the system. This includes counters saying how much data has been written and how much read, in each direction.

- The server tells Xen to grant the client access to this memory.

- The client asks Xen to map the memory into its address space. Now client and server can both access it at once. There are no locks in the protocol, so be careful!

- Either end sends data by writing it into the appropriate buffer and updating the appropriate counter in the shared block. The buffers are ring buffers, so after getting to the end, you start again from the beginning.

- The data-written (producer) counter and the data-read (consumer) counter together tell you how much data is in the buffer, and where it is. When the difference is zero, the reader must stop reading and wait for more data. When the difference is the size of the buffer, the writer must stop writing and wait for more space.

- When one end is waiting, the other can signal it using a Xen event channel. This essentially sets a pending flag to true at the other end, and wakes the VM if it is sleeping. If a VM tries to sleep while it has an event pending, it will immediately wake up again. Sending an event when one is already pending has no effect.
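The counter arithmetic and the event-channel behaviour described above can be sketched in a few lines of Python. This is only an illustration: the class and method names are mine, not the library's, and the counters are treated as unbounded integers, so counter wrap-around is ignored.

```python
from dataclasses import dataclass


@dataclass
class Ring:
    """One direction of the channel: a fixed-size ring plus two counters."""
    size: int
    prod: int = 0  # total bytes written so far (producer counter)
    cons: int = 0  # total bytes read so far (consumer counter)

    def data_available(self) -> int:
        # Zero means the reader must stop and wait for more data.
        return self.prod - self.cons

    def space_available(self) -> int:
        # Zero means the writer must stop and wait for more space.
        return self.size - self.data_available()

    def write_index(self) -> int:
        # Position in the ring where the next byte is written.
        return self.prod % self.size

    def read_index(self) -> int:
        # Position in the ring where the next byte is read.
        return self.cons % self.size


class EventPort:
    """A pending flag: sending while already pending has no further effect."""

    def __init__(self) -> None:
        self.pending = False

    def send(self) -> None:
        self.pending = True

    def wait_would_block(self) -> bool:
        # A pending event is consumed and the waiter carries on immediately;
        # otherwise the VM would go to sleep.
        if self.pending:
            self.pending = False
            return False
        return True
```

For example, after writing five bytes and reading three from a four-byte ring, `data_available()` is 2 and the next write lands at index 1.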

The public/io/libxenvchan.h header file provides some information, including the shared structures and comments about them:


You might also like to look at the vchan source code.

Note that the libxenvchan.h file in this directory includes and extends the above header file (with the same name).

For this blog post, we will ignore the Xen-specific business of sharing the memory and telling the client where it is, and assume that the client has mapped the memory and is ready to go.

Basic TLA concepts

We'll take a first look at TLA concepts and notation using a simplified version of vchan. TLA comes with excellent documentation, so I won't try to make this a full tutorial, but hopefully you will be able to follow the rest of this blog post after reading it. We will just consider a single direction of the channel (e.g. client-to-server) here.

Variables, states and behaviour

A variable in TLA is just what a programmer expects: something that changes over time.

For example, I'll use Buffer to represent the data currently being transmitted.

We can also add variables that are just useful for the specification.

I use Sent to represent everything the sender-side application asked the vchan library to transmit, and Got for everything the receiving application has received:


A state in TLA represents a snapshot of the world at some point.

It gives a value for each variable.

For example, { Got: "H", Buffer: "i", Sent: "Hi", ... } is a state.

The ... is just a reminder that a state also includes everything else in the world, not just the variables we care about.

Here are some more states:

| State | Got | Buffer | Sent |
|---|---|---|---|
| s0 | | | |
| s1 | | H | H |
| s2 | H | | H |
| s3 | H | i | Hi |
| s4 | Hi | | Hi |
| s5 | iH | | Hi |

A behaviour is a sequence of states, representing some possible history of the world.

For example, << s0, s1, s2, s3, s4 >> is a behaviour.

So is << s0, s1, s5 >>, but not one we want.

The basic idea in TLA is to specify precisely which behaviours we want and which we don't want.

A state expression is an expression that can be evaluated in the context of some state.

For example, this defines Integrity to be a state expression that is true whenever what we have got so far matches what we wanted to send:

Integrity is true for all the states above except for s5.

I added some helper operators Take and Drop here.

Sequences in TLA+ can be confusing because they are indexed from 1 rather than from 0, so it is easy to make off-by-one errors.

These operators just use lengths, which we can all agree on.

In Python syntax, it would be written something like:

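A minimal Python sketch of the helpers, assuming Take(m, i) means "the first i elements of m", Drop(m, i) means "everything after the first i elements", and Integrity means that Got is a prefix of Sent. The lower-case function names and the dictionary representation of a state are my own choices for illustration:

```python
def take(m, i):
    """The first i elements of the sequence m."""
    return m[:i]


def drop(m, i):
    """Everything in m after its first i elements."""
    return m[i:]


def integrity(state):
    """True when what we have got so far is a prefix of what was sent."""
    return take(state["Sent"], len(state["Got"])) == state["Got"]
```

With the states from the table, `integrity({"Got": "H", "Buffer": "i", "Sent": "Hi"})` holds, while `integrity({"Got": "iH", "Buffer": "", "Sent": "Hi"})` does not.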

A temporal formula is an expression that is evaluated in the context of a complete behaviour. It can use the temporal operators, which include:

- [] (that's supposed to look like a square): "always"
- <> (that's supposed to look like a diamond): "eventually"

[] F is true if the expression F is true at every point in the behaviour.

<> F is true if the expression F is true at any point in the behaviour.

Messages we send should eventually arrive. Here's one way to express that:


TLA syntax is a bit odd. It's rather like LaTeX (which is not surprising: Lamport is also the "La" in LaTeX).

\A means "for all" (rendered as an upside-down A).

So this says that for every number x, it is always true that if we have sent x bytes then eventually we will have received at least x bytes.

This pattern of [] (F => <>G) is common enough that it has a shorter notation of F ~> G, which is read as "F (always) leads to G". So, Availability can also be written as:

We're only checking the lengths in Availability, but combined with Integrity that's enough to ensure that we eventually receive what we want.
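To get a feel for the temporal operators, here is a rough Python approximation over finite lists of states. Real TLA behaviours are infinite, so this is only a sketch: it treats the end of the list as the end of time. The function names, the dictionary representation of a state and the `max_len` bound are assumptions made for illustration.

```python
def always(pred, behaviour):
    """[] pred: pred holds in every state of the behaviour."""
    return all(pred(s) for s in behaviour)


def eventually(pred, behaviour):
    """<> pred: pred holds in at least one state of the behaviour."""
    return any(pred(s) for s in behaviour)


def leads_to(f, g, behaviour):
    """f ~> g: whenever f holds, g holds in that state or a later one."""
    return all(
        eventually(g, behaviour[i:])
        for i, s in enumerate(behaviour)
        if f(s)
    )


def availability(behaviour, max_len=4):
    """For each length x up to max_len: sending x bytes leads to getting x."""
    return all(
        leads_to(
            lambda s: len(s["Sent"]) == x,
            lambda s: len(s["Got"]) >= x,
            behaviour,
        )
        for x in range(max_len + 1)
    )
```

A behaviour in which "H" is written and then read satisfies `availability`; one that stops right after the write does not.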

So ideally, we'd like to ensure that every possible behaviour of the vchan library will satisfy the temporal formula Properties:

That /\ is "and" by the way, and \/ is "or".

I did eventually start to be able to tell one from the other, though I still think && and || would be easier.

In case I forget to explain some syntax, A Summary of TLA lists most of it.

Actions

It is hopefully easy to see that Properties defines properties we want.

A user of vchan would be happy to see that these are things they can rely on.

But they don't provide much help to someone trying to implement vchan.

For that, TLA provides another way to specify behaviours.

An action in TLA is an expression that is evaluated in the context of a pair of states, representing a single atomic step of the system. For example:


The Read action is true of a step if that step transfers all the data from Buffer to Got.

Unprimed variables (e.g. Buffer) refer to the current state and primed ones (e.g. Buffer') refer to the next state.

There's some more strange notation here too:

- We're using /\ to form a bulleted list here rather than as an infix operator. This is indentation-sensitive. TLA also supports \/ lists in the same way.
- \o is sequence concatenation (+ in Python).
- << >> is the empty sequence ([ ] in Python).
- UNCHANGED Sent means Sent' = Sent.

In Python, it might look like this:


Actions correspond more closely to code than temporal formulas, because they only talk about how the next state is related to the current one.

This action only allows one thing: reading the whole buffer at once. In the C implementation of vchan the receiving application can provide a buffer of any size and the library will read at most enough bytes to fill the buffer. To model that, we will need a slightly more flexible version:


This says that a step is a Read step if there is any n (in the range 1 to the length of the buffer) such that we transferred n bytes from the buffer. \E means "there exists ...".

A Write action can be defined in a similar way:


A CONSTANT defines a parameter (input) of the specification (it's constant in the sense that it doesn't change between states).

A Write operation adds some message m to the buffer, and also adds a copy of it to Sent so we can talk about what the system is doing.

Seq(Byte) is the set of all possible sequences of bytes, and \ {<< >>} just excludes the empty sequence.

A step of the combined system is either a Read step or a Write step:

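As a rough Python analogue of the Read, Write and Next actions, each action can be written as a generator of the states reachable in one step. This is a sketch under assumptions: a state is a `(got, buffer, sent)` tuple of strings, `BUFFER_SIZE` stands in for the BufferSize constant, and `MESSAGES` is a small hypothetical stand-in for the infinite set of non-empty byte sequences.

```python
BUFFER_SIZE = 2               # stands in for the BufferSize constant
MESSAGES = ["a", "b", "ab"]   # hypothetical finite stand-in for Seq(Byte) \ {<< >>}


def read_steps(state):
    """Read: for some n in 1..Len(Buffer), move n bytes from Buffer to Got."""
    got, buffer, sent = state
    for n in range(1, len(buffer) + 1):
        yield (got + buffer[:n], buffer[n:], sent)


def write_steps(state):
    """Write: append a message to Buffer and Sent, if it fits in the buffer."""
    got, buffer, sent = state
    for m in MESSAGES:
        if len(buffer + m) <= BUFFER_SIZE:
            yield (got, buffer + m, sent + m)


def next_steps(state):
    """Next: a step is either a Read step or a Write step."""
    yield from read_steps(state)
    yield from write_steps(state)
```

The existential quantifiers (\E) become loops: every value that could satisfy the action yields one possible next state.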

We also need to define what a valid starting state for the algorithm looks like:


Finally, we can put all this together to get a temporal formula for the algorithm:


Some more notation here:

- [Next]_vars (that's Next in brackets with a subscript vars) means Next \/ UNCHANGED vars.
- Using Init (a state expression) in a temporal formula means it must be true for the first state of the behaviour.
- [][Action]_vars means that [Action]_vars must be true for each step.

TLA syntax requires the _vars subscript here.

This is because other things can be going on in the world beside our algorithm, so it must always be possible to take a step without our algorithm doing anything.

Spec defines behaviours just like Properties does, but in a way that makes it more obvious how to implement the protocol.
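Read as a check on a finite prefix of a behaviour, a formula of the shape Init /\ [][Next]_vars says: the first state is a valid initial state, and every later state is either unchanged or reachable by one Next step. A small Python sketch of that reading, where `is_init` and `next_steps` are hypothetical parameters supplied by the caller:

```python
def satisfies_spec(behaviour, is_init, next_steps):
    """Check Init /\\ [][Next]_vars on a finite prefix of a behaviour.

    is_init(state)    -> bool, the Init predicate
    next_steps(state) -> iterable of states reachable by one Next step
    """
    if not behaviour or not is_init(behaviour[0]):
        return False
    return all(
        t == s or t in next_steps(s)   # stuttering step, or a Next step
        for s, t in zip(behaviour, behaviour[1:])
    )
```

For a toy counter that starts at 0 and increments by one, `[0, 0, 1, 2]` is accepted (the repeated 0 is a stuttering step) and `[0, 2]` is rejected.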

Correctness of Spec

Now we have definitions of Spec and Properties, it makes sense to check that every behaviour of Spec satisfies Properties.

In Python terms, we want to check that all behaviours b satisfy this:

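Spelled out as a Python function, with `spec` and `properties` standing for hypothetical predicates over a whole behaviour, the check is a plain implication:

```python
def correct(b, spec, properties):
    """Spec => Properties for one behaviour b.

    Either b is not a behaviour allowed by the spec,
    or it is allowed and it satisfies the properties.
    """
    return (not spec(b)) or properties(b)
```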

i.e. either b isn't a behaviour that could result from the actions of our algorithm or, if it is, it satisfies Properties. In TLA notation, we write this as:

It's OK if a behaviour is allowed by Properties but not by Spec.

For example, the behaviour which goes straight from Got="", Sent="" to Got="Hi", Sent="Hi" in one step meets our requirements, but it's not a behaviour of Spec.

The real implementation may itself further restrict Spec.

For example, consider the behaviour << s0, s1, s2 >>:

| State | Got | Buffer | Sent |
|---|---|---|---|
| s0 | | Hi | Hi |
| s1 | H | i | Hi |
| s2 | Hi | | Hi |

The sender sends two bytes at once, but the reader reads them one at a time. This is a behaviour of the C implementation, because the reading application can ask the library to read into a 1-byte buffer. However, it is not a behaviour of the OCaml implementation, which gets to choose how much data to return to the application and will return both bytes together.

That's fine.

We just need to show that OCamlImpl => Spec and Spec => Properties and we can deduce that OCamlImpl => Properties.

This is, of course, the key purpose of a specification: we only need to check that each implementation implements the specification, not that each implementation directly provides the desired properties.

It might seem strange that an implementation doesn't have to allow all the specified behaviours.

In fact, even the trivial specification Spec == FALSE is considered to be a correct implementation of Properties, because it has no bad behaviours (no behaviours at all).

But that's OK.

Once the algorithm is running, it must have some behaviour, even if that behaviour is to do nothing.

As the user of the library, you are responsible for checking that you can use it (e.g. by ensuring that the Init conditions are met).

An algorithm without any behaviours corresponds to a library you could never use, not to one that goes wrong once it is running.

The model checker

Now comes the fun part: we can ask TLC (the TLA model checker) to check that Spec => Properties.

You do this by asking the toolbox to create a new model (I called mine SpecOK) and setting Spec as the "behaviour spec". It will prompt for a value for BufferSize. I used 2.

There will be various things to fix up:

- To check Write, TLC first tries to get every possible Seq(Byte), which is an infinite set. I defined MSG == Seq(Byte) and changed Write to use MSG. I then added an alternative definition for MSG in the model so that we only send messages of limited length. In fact, my replacement MSG ensures that Sent will always just be an incrementing sequence (<< 1, 2, 3, ... >>). That's enough to check Properties, and much quicker than checking every possible message.
- The system can keep sending forever. I added a state constraint to the model: Len(Sent) < 4. This tells TLC to stop considering any execution once this becomes false.

With that, the model runs successfully. This is a nice feature of TLA: instead of changing our specification to make it testable, we keep the specification correct and just override some aspects of it in the model. So, the specification says we can send any message, but the model only checks a few of them.

Now we can add Integrity as an invariant to check.

That passes, but it's good to double-check by changing the algorithm.

I changed Read so that it doesn't clear the buffer, using Buffer' = Drop(Buffer, 0) (with 0 instead of n).

Then TLC reports a counter-example ("Invariant Integrity is violated"):

- The sender writes << 1, 2 >> to Buffer.
- The reader reads one byte, to give Got=1, Buffer=12, Sent=12.
- The reader reads another byte, to give Got=11, Buffer=12, Sent=12.

Looks like it really was checking what we wanted.

It's good to be careful. If we'd accidentally added Integrity as a "property" to check rather than as an "invariant" then it would have interpreted it as a temporal formula and reported success just because it is true in the initial state.

One really nice feature of TLC is that (unlike a fuzz tester) it does a breadth-first search and therefore finds minimal counter-examples for invariants.

The example above is therefore the quickest way to violate Integrity.
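The idea behind that breadth-first search can be shown with a toy explicit-state checker in Python. This is a sketch of the technique, not of how TLC is implemented: the state layout, the `broken_read` switch and all names are assumptions for illustration. It mirrors the model described above, with a buffer size of 2, messages that keep Sent an incrementing sequence, and the Len(Sent) < 4 state constraint.

```python
from collections import deque

BUFFER_SIZE = 2
MAX_SENT = 4   # mirrors the state constraint Len(Sent) < 4


def successors(state, broken_read=False):
    got, buf, sent = state
    # Write: append the next k values of the incrementing sequence, if they fit.
    for k in range(1, BUFFER_SIZE - len(buf) + 1):
        m = tuple(range(len(sent) + 1, len(sent) + k + 1))
        yield (got, buf + m, sent + m)
    # Read: copy the first n bytes to Got and (unless broken) drop them.
    for n in range(1, len(buf) + 1):
        new_buf = buf if broken_read else buf[n:]
        yield (got + buf[:n], new_buf, sent)


def integrity(state):
    got, _, sent = state
    return sent[:len(got)] == got


def check(invariant, broken_read=False):
    """Breadth-first search. Returns a shortest trace to a bad state, or None."""
    init = ((), (), ())
    parent = {init: None}
    queue = deque([init])
    while queue:
        state = queue.popleft()
        if not invariant(state):
            trace = []
            while state is not None:
                trace.append(state)
                state = parent[state]
            return trace[::-1]
        if len(state[2]) >= MAX_SENT:
            continue   # state constraint: do not explore beyond this state
        for nxt in successors(state, broken_read):
            if nxt not in parent:
                parent[nxt] = state
                queue.append(nxt)
    return None
```

Here `check(integrity)` returns `None`, while `check(integrity, broken_read=True)` returns a trace of four states (three steps). Because states are visited in order of distance from the initial state, no shorter violating trace exists.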

Checking Availability complains because of the use of Nat (we're asking it to check for every possible length).

I replaced the Nat with AvailabilityNat and overrode that to be 0..4 in the model.

It then complains "Temporal properties were violated" and shows an example where the sender wrote some data and the reader never read it.

The problem is, [Next]_vars always allows us to do nothing.

To fix this, we can specify a "weak fairness" constraint.

WF_vars(action) says that we can't just stop forever with action being always possible but never happening.

I updated Spec to require the Read action to be fair:


Again, care is needed here.

If we had specified WF_vars(Next) then we would be forcing the sender to keep sending forever, which users of vchan are not required to do.

Worse, this would mean that every possible behaviour of the system would result in Sent growing forever.

Every behaviour would therefore hit our Len(Sent) < 4 constraint and TLC wouldn't consider it further.

That means that TLC would never check any actual behaviour against Availability, and its reports of success would be meaningless!

Changing Read to require n \in 2..Len(Buffer) is a quick way to see that TLC is actually checking Availability.

Here's the complete spec so far: vchan1.pdf (source)

The real vchan

The simple Spec algorithm above has some limitations.

One obvious simplification is that Buffer is just the sequence of bytes in transit, whereas in the real system it is a ring buffer, made up of an array of bytes along with the producer and consumer counters.

We could replace it with three separate variables to make that explicit.

However, ring buffers in Xen are well understood and I don't feel that it would make the specification any clearer to include that.

A more serious problem is that Spec assumes that there is a way to perform the Read and Write operations atomically.

Otherwise the real system would have behaviours not covered by the spec.

To implement the above Spec correctly, you'd need some kind of lock.

The real vchan protocol is more complicated than Spec, but avoids the need for a lock.

The real system has more shared state than just Buffer.

I added extra variables to the spec for each item of shared state in the C code, along with its initial value:

- SenderLive = TRUE (sender sets to FALSE to close connection)
- ReceiverLive = TRUE (receiver sets to FALSE to close connection)
- NotifyWrite = TRUE (receiver wants to be notified of next write)
- DataReadyInt = FALSE (sender has signalled receiver over event channel)
- NotifyRead = FALSE (sender wants to be notified of next read)
- SpaceAvailableInt = FALSE (receiver has notified sender over event channel)

DataReadyInt represents the state of the receiver's event port.

The sender can make a Xen hypercall to set this and wake (or interrupt) the receiver.

I guess sending these events is somewhat slow, because the NotifyWrite system is used to avoid sending events unnecessarily.

Likewise, SpaceAvailableInt is the sender's event port.

The algorithm

Here is my understanding of the protocol. On the sending side:

1. The sending application asks to send some bytes. We check whether the receiver has closed the channel and abort if so.
2. We check the amount of buffer space available.
3. If there isn't enough, we set NotifyRead so the receiver will notify us when there is more. We also check the space again after this, in case it changed while setting the flag.
4. If there is any space:
   - We write as much data as we can to the buffer.
   - If the NotifyWrite flag is set, we clear it and notify the receiver of the write.
5. If we wrote everything, we return success.
6. Otherwise, we wait to be notified of more space.
7. We check whether the receiver has closed the channel. If so we abort. Otherwise, we go back to step 2.

On the receiving side:

1. The receiving application asks us to read up to some amount of data.
2. We check the amount of data available in the buffer.
3. If there isn't as much as requested, we set NotifyWrite so the sender will notify us when there is. We also check the amount of data again after this, in case it changed while setting the flag.
4. If there is any data, we read up to the amount requested. If the NotifyRead flag is set, we clear it and notify the sender of the new space. We return success to the application (even if we didn't get as much as requested).
5. Otherwise (if there was no data), we check whether the sender has closed the connection.
6. If not (if the connection is still open), we wait to be notified of more data, and then go back to step 2.

Either side can close the connection by clearing their "live" flag and signalling the other side. I assumed there is also some process-local way that the close operation can notify its own side if it's currently blocked.
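The order of operations on each side can be sketched in Python as follows. This is my own illustration of the steps listed above, not the library's code: the `Shared` class and the `try_write` and `try_read` names are hypothetical, each function performs one pass of the loop and reports whether the caller would have to wait, and each function runs atomically here, which is precisely what the real protocol cannot assume.

```python
from dataclasses import dataclass, field


@dataclass
class Shared:
    size: int
    buffer: list = field(default_factory=list)   # bytes in transit
    sender_live: bool = True
    receiver_live: bool = True
    notify_write: bool = True           # receiver wants an event on next write
    notify_read: bool = False           # sender wants an event on next read
    data_ready_int: bool = False        # receiver's event port
    space_available_int: bool = False   # sender's event port


def try_write(sh, msg):
    """One pass of the sender loop. Returns (status, unsent_bytes)."""
    if not sh.receiver_live:
        return "closed", msg
    free = sh.size - len(sh.buffer)
    if free < len(msg):
        sh.notify_read = True
        free = sh.size - len(sh.buffer)   # recheck after setting the flag
    if free > 0:
        sh.buffer.extend(msg[:free])
        msg = msg[free:]
        if sh.notify_write:
            sh.notify_write = False
            sh.data_ready_int = True      # signal the receiver
    return ("done", msg) if not msg else ("blocked", msg)


def try_read(sh, want):
    """One pass of the receiver loop. Returns (status, data)."""
    have = len(sh.buffer)
    if have < want:
        sh.notify_write = True
        have = len(sh.buffer)             # recheck after setting the flag
    if have > 0:
        n = min(have, want)
        data = sh.buffer[:n]
        del sh.buffer[:n]
        if sh.notify_read:
            sh.notify_read = False
            sh.space_available_int = True  # signal the sender
        return "ok", data
    if not sh.sender_live:
        return "closed", []
    return "blocked", []
```

A caller that gets `"blocked"` would wait for its event port to be signalled and then call the function again with the remaining data.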

To make expressing this kind of step-by-step algorithm easier, TLA+ provides a programming-language-like syntax called PlusCal. It then translates PlusCal into TLA actions.

Confusingly, there are two different syntaxes for PlusCal: Pascal style and C style. This means that, when you search for examples on the web, there is a 50% chance they won't work because they're using the other flavour. I started with the Pascal one because that was the first example I found, but switched to C-style later because it was more compact.

Here is my attempt at describing the sender algorithm above in PlusCal:


The labels (e.g. sender_request_notify:) represent points in the program where other actions can happen.

Everything between two labels is considered to be atomic.

I checked that every block of code between labels accesses only one shared variable.

This means that the real system can't see any states that we don't consider.

The toolbox doesn't provide any help with this; you just have to check manually.

The sender_ready label represents a state where the client application hasn't yet decided to send any data.

Its label is tagged with - to indicate that fairness doesn't apply here, because the protocol doesn't require applications to keep sending more data forever.

The other steps are fair, because once we've decided to send something we should keep going.

Taking a step from sender_ready to sender_write corresponds to the vchan library's write function being called with some argument m.

The with (m \in MSG) says that m could be any message from the set MSG.

TLA also contains a CHOOSE operator that looks like it might do the same thing, but it doesn't.

When you use with, you are saying that TLC should check all possible messages.

When you use CHOOSE, you are saying that it doesn't matter which message TLC tries (and it will always try the same one).

Or, in terms of the specification, a CHOOSE would say that applications can only ever send one particular message, without telling you what that message is.
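The difference can be mimicked in Python. This is only an analogy, with a hypothetical finite `MSG` set and function names of my own: `with` behaves like a branch point with one branch per element, whereas CHOOSE behaves like a function that returns some fixed element, the same one every time.

```python
MSG = [(1,), (1, 2)]   # hypothetical finite message set


def with_m(msg_set):
    """Like `with (m in MSG)`: every element is a possible next step,
    so a model checker must explore all of them."""
    return list(msg_set)


def choose(msg_set):
    """Like CHOOSE: one fixed element, the same on every call.
    Using min() is an arbitrary choice; any deterministic rule would do."""
    return min(msg_set)
```

A checker built on `with_m` explores two branches here; one built on `choose` only ever explores a single message.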

In sender_write_data, we set free := 0 for no obvious reason.

This is just to reduce the number of states that the model checker needs to explore, since we don't care about its value after this point.

Some of the code is a little awkward because I had to put things in else branches that would more naturally go after the whole if block, but the translator wouldn't let me do that.

The use of semi-colons is also a bit confusing: the PlusCal-to-TLA translator requires them after a closing brace in some places, but the PDF generator messes up the indentation if you include them.

Here's how the code block starting at sender_request_notify gets translated into a TLA action:


pc is a mapping from process ID to the label where that process is currently executing.

So sender_request_notify can only be performed when the SenderWriteID process is at the sender_request_notify label.

Afterwards pc[SenderWriteID] will either be at sender_write_data or sender_recheck_len (if there wasn't enough space for the whole message).

Here's the code for the receiver:


It's quite similar to before.

recv_ready corresponds to a state where the application hasn't yet called read.

When it does, we take n (the maximum number of bytes to read) as an argument and store it in the local variable want.

Note: you can use the C library in blocking or non-blocking mode.

In blocking mode, a write (or read) waits until data is sent (or received).

In non-blocking mode, it returns a special code to the application indicating that it needs to wait.

The application then does the waiting itself and then calls the library again.

I think the specification above covers both cases, depending on whether you think of sender_blocked and recv_await_data as representing code inside or outside of the library.

We also need a way to close the channel. It wasn't clear to me, from looking at the C headers, when exactly you're allowed to do that. I think that if you had a multi-threaded program and you called the close function while the write function was blocked, it would unblock and return. But if you happened to call it at the wrong time, it would try to use a closed file descriptor and fail (or read from the wrong one). So I guess it's single threaded, and you should use the non-blocking mode if you want to cancel things.

That means that the sender can close only when it is at sender_ready or sender_blocked, and similarly for the receiver.

The situation with the OCaml code is the same, because it is cooperatively threaded and so the close operation can only be called while blocked or idle.

However, I decided to make the specification more general and allow for closing at any point by modelling closing as separate processes:


Again, the processes are "fair" because once we start closing we should finish, but the initial labels are tagged with "-" to disable fairness there: it's OK if you keep a vchan open forever.

There's a slight naming problem here. The PlusCal translator names the actions it generates after the starting state of the action. So sender_open is the action that moves from the sender_open label. That is, the sender_open action actually closes the connection!

Finally, we share the event channel with the buffer going in the other direction, so we might get notifications that are nothing to do with us. To ensure we handle that, I added another process that can send events at any time:


either/or says that we need to consider both possibilities.

This process isn't marked fair, because we can't rely on these interrupts coming.

But we do have to handle them when they happen.

Testing the full spec

PlusCal code is written in a specially-formatted comment block, and you have to press Ctrl-T to generate (or update) the TLA translation before running the model checker.

Be aware that the TLA Toolbox is a bit unreliable about keyboard short-cuts. While typing into the editor always works, short-cuts such as Ctrl-S (save) sometimes get disconnected. So you think you're doing "edit/save/translate/save/check" cycles, but really you're just checking some old version over and over again. You can avoid this by always running the model checker with the keyboard shortcut too, since that always seems to fail at the same time as the others. Focussing a different part of the GUI and then clicking back in the editor again fixes everything for a while.

Anyway, running our model on the new spec shows that Integrity is still OK.

However, the Availability check fails with the following counter-example:

- The sender writes << 1 >> to Buffer.

- The sender closes the connection.

- The receiver closes the connection.

- All processes come to a stop, but the data never arrived.

We need to update Availability to consider the effects of closing connections.

And at this point, I'm very unsure what vchan is intended to do.

We could say:

That passes. But vchan describes itself as being like a Unix socket. If you write to a Unix socket and then close it, you still expect the data to be delivered. So actually I tried this:

This says that if a sender write operation completes successfully (we're back at sender_ready) and at that point the sender hasn't closed the connection, then the receiver will eventually receive the data (or close its end).

That is how I would expect it to behave. But TLC reports that the new spec does not satisfy this, giving this example (simplified - there are 16 steps in total):

- The receiver starts reading. It finds that the buffer is empty.

- The sender writes some data to Buffer and returns to sender_ready.

- The sender closes the channel.

- The receiver sees that the connection is closed and stops.

Is this a bug? Without a specification, it's impossible to say.

Maybe vchan was never intended to ensure delivery once the sender has closed its end. But this case only happens if you're very unlucky about the scheduling. If the receiving application calls read when the sender has closed the connection but there is data available, then the C code does return the data in that case.

It's only if the sender happens to close the connection just after the receiver has checked the buffer and just before it checks the close flag that this happens.

It's also easy to fix. I changed the code in the receiver to do a final check on the buffer before giving up:

With that change, we can be sure that data sent while the connection is open will always be delivered (provided only that the receiver doesn't close the connection itself). If you spotted this issue yourself while you were reviewing the code earlier, then well done!
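The unlucky interleaving, and the effect of the final check, can be replayed in a few lines of Python. This is only a sketch of that one schedule, not the C code or the PlusCal spec; the function name receive_after_race and its final_check parameter are made up for illustration.

```python
def receive_after_race(final_check):
    """Replay one unlucky interleaving and return what the receiver got."""
    buffer = []         # shared ring buffer, modelled as a plain list
    sender_live = True  # shared flag: has the sender closed its end?
    got = []

    # Receiver: check the buffer first. It is empty.
    have = len(buffer)

    # Sender runs in the gap: write one byte, then close.
    buffer.append(1)
    sender_live = False

    # Receiver: nothing was available, so now check the close flag.
    if have == 0 and not sender_live:
        if final_check:
            # The fix: look at the buffer once more before giving up.
            got.extend(buffer)
            buffer.clear()
        return got  # report end-of-stream to the application

    got.extend(buffer[:have])
    return got


print(receive_after_race(final_check=False))  # the byte is lost
print(receive_after_race(final_check=True))   # the byte is delivered
```

Without the final check the receiver stops with nothing, even though the sender's write completed before it closed.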

Note that when TLC finds a problem with a temporal property (such as Availability), it does not necessarily find the shortest example first. I changed the limit on Sent to Len(Sent) < 2 and added an action constraint of ~SpuriousInterrupts to get a simpler example, with only 1 byte being sent and no spurious interrupts.

Some odd things

I noticed a couple of other odd things, which I thought I'd mention.

First, NotifyWrite is initialised to TRUE, which seemed unnecessary. We can initialise it to FALSE instead and everything still works. We can even initialise it with NotifyWrite \in {TRUE, FALSE} to allow either behaviour, and thus test that old programs that followed the original version of the spec still work with either behaviour.

That's a nice advantage of using a specification language. Saying "the code is the spec" becomes less useful as you build up more and more versions of the code!

However, because there was no spec before, we can't be sure that existing programs do follow it. And, in fact, I found that QubesDB uses the vchan library in a different and unexpected way. Instead of calling read, and then waiting if libvchan says to, QubesDB blocks first in all cases, and then calls the read function once it gets an event.

We can document that by adding an extra step at the start of ReceiverRead:

Then TLC shows that NotifyWrite cannot start as FALSE.

The second odd thing is that the receiver sets NotifyWrite whenever there isn't enough data available to fill the application's buffer completely. But usually when you do a read operation you just provide a buffer large enough for the largest likely message. It would probably make more sense to set NotifyWrite only when the buffer is completely empty.

After checking the current version of the algorithm, I changed the specification to allow either behaviour.

Why does vchan work?

At this point, we have specified what vchan should do and how it does it. We have also checked that it does do this, at least for messages up to 3 bytes long with a buffer size of 2. That doesn't sound like much, but we still checked 79,288 distinct states, with behaviours up to 38 steps long. This would be a perfectly reasonable place to declare the specification (and blog post) finished.

However, TLA has some other interesting abilities. In particular, it provides a very interesting technique to help discover why the algorithm works.

We'll start with Integrity.

We would like to argue as follows:

1. Integrity is true in any initial state (i.e. Init => Integrity).

2. Any Next step preserves Integrity (i.e. Integrity /\ Next => Integrity').

Then it would just be a matter of looking at each possible action that makes up Next and checking that each one individually preserves Integrity.

However, we can't do this with Integrity because (2) isn't true.

For example, the state { Got: "", Buffer: "21", Sent: "12" } satisfies Integrity, but if we take a read step then the new state won't.
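That example state can be checked mechanically. The following Python sketch models Integrity as "Got is a prefix of Sent" and a read step as moving bytes from the front of Buffer to the end of Got; the function names are made up here and are not part of the specification.

```python
def integrity(state):
    """Integrity: Got is a prefix of Sent."""
    return state["Sent"].startswith(state["Got"])


def read_step(state, n=1):
    """Move the first n bytes of Buffer to the end of Got."""
    return {
        "Got": state["Got"] + state["Buffer"][:n],
        "Buffer": state["Buffer"][n:],
        "Sent": state["Sent"],
    }


before = {"Got": "", "Buffer": "21", "Sent": "12"}
after = read_step(before)

print(integrity(before))  # the (unreachable) starting state satisfies Integrity
print(integrity(after))   # after reading "2", Got is no longer a prefix of "12"
```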

Instead, we have to argue "If we take a Next step in any reachable state then Integrity'", but that's very difficult because how do we know whether a state is reachable without searching them all?

So the idea is to make a stronger version of Integrity, called IntegrityI, which does what we want.

IntegrityI is called an inductive invariant.

The first step is fairly obvious - I began with:

Integrity just said that Got is a prefix of Sent.

This says specifically that the rest is Buffer \o msg - the data currently being transmitted and the data yet to be transmitted.

We can ask TLC to check Init /\ [][Next]_vars => []IntegrityI to check that it is an invariant, as before.

It does that by finding all the Init states and then taking Next steps to find all reachable states.

But we can also ask it to check IntegrityI /\ [][Next]_vars => []IntegrityI.

That is, the same thing but starting from any state matching IntegrityI instead of Init.

I created a new model (IntegrityI) to do that.
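To make the difference between the two checks concrete, here is a brute-force Python sketch of the second one. It uses a deliberately tiny stand-in for the protocol (only three actions, no notify flags, no closing), so it is an assumption-laden toy rather than the vchan spec. It enumerates every state, keeps those matching a candidate invariant, and looks for a single step that breaks it.

```python
from itertools import product

ALPHABET = "12"
MAX_LEN = 2      # longest string tried for each variable
BUFFER_SIZE = 2


def strings(max_len):
    """All strings over ALPHABET of length 0..max_len."""
    out = [""]
    for n in range(1, max_len + 1):
        out += ["".join(p) for p in product(ALPHABET, repeat=n)]
    return out


def successors(state):
    """Toy Next relation over (Got, Buffer, msg, Sent)."""
    got, buf, msg, sent = state
    if msg == "":                        # application hands over a new message
        for m in ALPHABET:
            yield (got, buf, m, sent + m)
    if msg and len(buf) < BUFFER_SIZE:   # sender copies one byte into the buffer
        yield (got, buf + msg[0], msg[1:], sent)
    if buf:                              # receiver takes one byte from the buffer
        yield (got + buf[0], buf[1:], msg, sent)


def integrity(state):
    got, _buf, _msg, sent = state
    return sent.startswith(got)


def integrity_i(state):
    got, buf, msg, sent = state
    return sent == got + buf + msg


def find_non_inductive_step(invariant):
    """Return (state, next_state) breaking the invariant, or None."""
    for state in product(strings(MAX_LEN), repeat=4):
        if not invariant(state):
            continue
        for nxt in successors(state):
            if not invariant(nxt):
                return state, nxt
    return None


print(find_non_inductive_step(integrity))    # some two-state counter-example
print(find_non_inductive_step(integrity_i))  # None: preserved by every step
```

Note that the search starts from every state matching the invariant, reachable or not, which is why the weaker property fails here even though it holds in every reachable state.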

It reports a few technical problems at the start because it doesn't know the types of anything.

For example, it can't choose initial values for SenderLive without knowing that SenderLive is a boolean.

I added a TypeOK state expression that gives the expected type of every variable:

We also need to tell it all the possible states of pc (which says which label each process is at):

You might imagine that the PlusCal translator would generate that for you, but it doesn't.

We also need to override MESSAGE with FINITE_MESSAGE(n) for some n (I used 2).

Otherwise, it can't enumerate all possible messages.

Now we have:

With that out of the way, TLC starts finding real problems (that is, examples showing that IntegrityI /\ Next => IntegrityI' isn't true).

First, recv_read_data would do an out-of-bounds read if have = 1 and Buffer = << >>.

Our job is to explain why that isn't a valid state.

We can fix it with an extra constraint:

(note: that => is "implies", while the <= is "less-than-or-equal-to")

Now it complains that if we do recv_got_len with Buffer = << >>, have = 1, want = 0 then we end up in recv_read_data with Buffer = << >>, have = 1, and we have to explain why that can't happen, and so on.

Because TLC searches breadth-first, the examples it finds never have more than 2 states. You just have to explain why the first state can't happen in the real system. Eventually, you get a big ugly pile of constraints, which you then think about for a bit and simplify. I ended up with:

It's a good idea to check the final IntegrityI with the original SpecOK model, just to check it really is an invariant.

So, in summary, Integrity is always true because:

- Sent is always the concatenation of Got, Buffer and msg. That's fairly obvious, because sender_ready sets msg and appends the same thing to Sent, and the other steps (sender_write_data and recv_read_data) just transfer some bytes from the start of one variable to the end of another.

- Although, like all local information, the receiver's have variable might be out-of-date, there must be at least that much data in the buffer, because the sender process will only have added more, not removed any. This is sufficient to ensure that we never do an out-of-range read.

- Likewise, the sender's free variable is a lower bound on the true amount of free space, because the receiver only ever creates more space. We will therefore never write beyond the free space.
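The three points above can be exercised with a small randomised simulation. This is a Python sketch under simplifying assumptions (four coarse actions picked at random, no notify flags or closing), not a translation of the spec; the action names are invented. The assertions are the same claims: stale have and free values are always safe lower bounds, and Sent is always Got followed by Buffer (msg is folded away because each write here is atomic).

```python
import random

BUFFER_SIZE = 2


def run(seed, steps=1000):
    rng = random.Random(seed)
    buffer, got, sent = [], [], []
    free = 0   # sender's local estimate of free space (0 when not in use)
    have = 0   # receiver's local estimate of available data (0 when not in use)
    next_byte = 0

    for _ in range(steps):
        action = rng.choice(["send_check", "send_write", "recv_check", "recv_read"])
        if action == "send_check":
            free = BUFFER_SIZE - len(buffer)
        elif action == "send_write":
            # Possibly stale, but never more than the true free space.
            assert free <= BUFFER_SIZE - len(buffer)
            for _i in range(free):
                buffer.append(next_byte)
                sent.append(next_byte)
                next_byte += 1
            free = 0
        elif action == "recv_check":
            have = len(buffer)
        else:  # recv_read
            # Possibly stale, but never more than the data really present.
            assert have <= len(buffer)
            got.extend(buffer[:have])
            del buffer[:have]
            have = 0
        assert len(buffer) <= BUFFER_SIZE
        assert sent == got + buffer
    return got, sent


got, sent = run(seed=0)
print(len(got), len(sent))
```

A random run is much weaker evidence than TLC's exhaustive search, of course; it only illustrates what the invariant is saying.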

I think this ability to explain why an algorithm works, by being shown examples where the inductive property doesn't hold, is a really nice feature of TLA. Inductive invariants are useful as a first step towards writing a proof, but I think they're valuable even on their own. If you're documenting your own algorithm, this process will get you to explain your own reasons for believing it works (I tried it on a simple algorithm in my own code and it seemed helpful).

Some notes:

- Originally, I had the free and have constraints depending on pc. However, the algorithm sets them to zero when not in use so it turns out they're always true.

- IntegrityI matches 532,224 states, even with a maximum Sent length of 1, but it passes! There are some games you can play to speed things up; see Using TLC to Check Inductive Invariance for some suggestions (I only discovered that while writing this up).

Proving Integrity

TLA provides a syntax for writing proofs, and integrates with TLAPS (the TLA+ Proof System) to allow them to be checked automatically.

Proving IntegrityI is just a matter of showing that Init => IntegrityI and that it is preserved by any possible [Next]_vars step. To do that, we consider each action of Next individually, which is long but simple enough.

I was able to prove it, but the recv_read_data action was a little difficult because we don't know that want > 0 at that point, so we have to do some extra work to prove that transferring 0 bytes works, even though the real system never does that. I therefore added an extra condition to IntegrityI that want is non-zero whenever it's in use, and also conditions about have and free being 0 when not in use, for completeness:

Availability

Integrity was quite easy to prove, but I had more trouble trying to explain Availability.

One way to start would be to add Availability as a property to check to the IntegrityI model. However, it takes a while to check properties as it does them at the end, and the examples it finds may have several steps (it took 1m15s to find a counter-example for me).

Here's a faster way (37s). The algorithm will deadlock if both sender and receiver are in their blocked states and neither interrupt is pending, so I made a new invariant, I, which says that deadlock can't happen:

I discovered some obvious facts about closing the connection.

For example, the SenderLive flag is set if and only if the sender's close thread hasn't done anything.

I've put them all together in CloseOK:

But I had problems with other examples TLC showed me, and I realised that I didn't actually know why this algorithm doesn't deadlock.

Intuitively it seems clear enough: the sender puts data in the buffer when there's space and notifies the receiver, and the receiver reads it and notifies the writer. What could go wrong? But both processes are working with information that can be out-of-date. By the time the sender decides to block because the buffer looked full, the buffer might be empty. And by the time the receiver decides to block because it looked empty, it might be full.

Maybe you already saw why it works from the C code, or the algorithm above, but it took me a while to figure it out! I eventually ended up with an invariant of the form:

SendMayBlock is TRUE if we're in a state that may lead to being blocked without checking the buffer's free space again. Likewise, ReceiveMayBlock indicates that the receiver might block. SpaceWakeupComing and DataWakeupComing predict whether we're going to get an interrupt. The idea is that if we're going to block, we need to be sure we'll be woken up.

It's a bit ugly, though, e.g.

It did pass my model that tested sending one byte, and I decided to try a proof.

Well, it didn't work.

The problem seems to be that DataWakeupComing and SpaceWakeupComing are really mutually recursive.

The reader will wake up if the sender wakes it, but the sender might be blocked, or about to block.

That's OK though, as long as the receiver will wake it, which it will do, once the sender wakes it...

You've probably already figured it out, but I thought I'd document my confusion. It occurred to me that although each process might have out-of-date information, that could be fine as long as at any one moment one of them was right. The last process to update the buffer must know how full it is, so one of them must have correct information at any given time, and that should be enough to avoid deadlock.

That didn't work either.

When you're at a proof step and can't see why it's correct, you can ask TLC to show you an example. For instance, if you're stuck trying to prove that sender_request_notify preserves I when the receiver is at recv_ready, the buffer is full, and ReceiverLive = FALSE, you can ask for an example of that:

You then create a new model that searches Example /\ [][Next]_vars and tests I.

As long as Example has several constraints, you can use a much larger model for this.

I also ask it to check the property [][FALSE]_vars, which means it will show any step starting from Example.

It quickly became clear what was wrong: it is quite possible that neither process is up-to-date.

If both processes see the buffer contains X bytes of data, and the sender sends Y bytes and the receiver reads Z bytes, then the sender will think there are X + Y bytes in the buffer and the receiver will think there are X - Z bytes, and neither is correct.
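The X, Y, Z argument is easy to enumerate. The Python sketch below is just that arithmetic (it is not taken from the spec, and both_stale_cases is a made-up name): it lists every case where both sides last saw X bytes, the sender then adds Y >= 1 and the receiver removes Z >= 1, so that neither local view matches the true buffer contents.

```python
def both_stale_cases(buffer_size):
    """List (X, Y, Z, sender_view, receiver_view, actual) where both views are stale."""
    cases = []
    for x in range(buffer_size + 1):               # bytes both sides last saw
        for y in range(1, buffer_size - x + 1):    # sender adds y >= 1 bytes
            for z in range(1, x + 1):              # receiver removes z >= 1 bytes
                sender_view = x + y                # sender doesn't know about the read
                receiver_view = x - z              # receiver doesn't know about the write
                actual = x + y - z
                cases.append((x, y, z, sender_view, receiver_view, actual))
    return cases


print(both_stale_cases(1))  # no such case with a 1-byte buffer
print(both_stale_cases(2))  # X=1, Y=1, Z=1: sender sees 2, receiver sees 0, truth is 1
```

In this counting model a 1-byte buffer has no such case at all, since writing needs X = 0 and reading needs X = 1. With 2 bytes, the single case has the sender believing the buffer is full and the receiver believing it is empty at the same moment.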

My original 1-byte buffer was just too small to find a counter-example.

The real reason why vchan works is actually rather obvious.

I don't know why I didn't see it earlier.

But eventually it occurred to me that I could make use of Got and Sent.

I defined WriteLimit to be the total number of bytes that the sender would write before blocking, if the receiver never did anything further. And I defined ReadLimit to be the total number of bytes that the receiver would read if the sender never did anything else.

Did I define these limits correctly?

It's easy to ask TLC to check some extra properties while it's running.

For example, I used this to check that ReadLimit behaves sensibly:

Because ReadLimit is defined in terms of what it does when no other processes run, this property should ideally be tested in a model without the fairness conditions (i.e. just Init /\ [][Next]_vars). Otherwise, fairness may force the sender to perform a step. We still want to allow other steps, though, to show that ReadLimit is a lower bound.

With this, we can argue that e.g. a 2-byte buffer will eventually transfer 3 bytes:

- The receiver will eventually read 3 bytes as long as the sender eventually sends 3 bytes.

- The sender will eventually send 3, if the receiver reads at least 1.

- The receiver will read 1 if the sender sends at least 1.

- The sender will send 1 if the reader has read at least 0 bytes, which is always true.
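The bootstrapping argument in the list above can be played out numerically. The Python sketch below uses deliberately crude stand-ins for the two limits (the sender can always get BufferSize bytes ahead of the receiver, and the receiver can always read everything written), so write_limit and read_limit here are assumptions for illustration, not the definitions used in the specification.

```python
BUFFER_SIZE = 2
TOTAL = 3  # bytes the application wants to send


def write_limit(read_so_far):
    """Bytes the sender can have written, given how much has been read."""
    return min(TOTAL, read_so_far + BUFFER_SIZE)


def read_limit(written_so_far):
    """Bytes the receiver can have read, given how much has been written."""
    return written_so_far


def bootstrap():
    """Alternate the two guarantees until they stop improving."""
    read, history = 0, []
    while True:
        written = write_limit(read)
        new_read = read_limit(written)
        if new_read == read:
            return read, history
        history.append((read, written, new_read))
        read = new_read


final_read, history = bootstrap()
print(final_read)  # 3: all three bytes arrive through the 2-byte buffer
print(history)     # [(0, 2, 2), (2, 3, 3)]
```

Each guarantee feeds the other, starting from "the receiver has read at least 0 bytes", which needs no justification.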

By this point, I was learning to be more cautious before trying a proof, so I added some new models to check this idea further. One prevents the sender from ever closing the connection and the other prevents the receiver from ever closing. That reduces the number of states to consider and I was able to check a slightly larger model.

If a process is on a path to being blocked then it must have set its notify flag. NotifyFlagsCorrect says that in that case, the flag is still set, or the interrupt has been sent, or the other process is just about to trigger the interrupt.

I managed to use that to prove that the sender's steps preserved I, but I needed a little extra to finish the receiver proof.

At this point, I finally spotted the obvious invariant (which you, no doubt, saw all along): whenever NotifyRead is still set, the sender has accurate information about the buffer.

That's pretty obvious, isn't it? The sender checks the buffer after setting the flag, so it must have accurate information at that point. The receiver clears the flag after reading from the buffer (which invalidates the sender's information).

Now I had a dilemma. There was obviously going to be a matching property about NotifyWrite. Should I add that, or continue with just this? I was nearly done, so I continued and finished off the proofs.

With I proved, I was able to prove some other nice things quite easily:

That says that, whenever the sender is idle or blocked, the receiver will read everything sent so far, without any further help from the sender. And:

That says that whenever the receiver is blocked, the sender can fill the buffer. That's pretty nice. It would be possible to make a vchan system that e.g. could only send 1 byte at a time and still prove it couldn't deadlock and would always deliver data, but here we have shown that the algorithm can use the whole buffer.

At least, that's what these theorems say as long as you believe that ReadLimit and WriteLimit are defined correctly.

With the proof complete, I then went back and deleted all the stuff about ReadLimit and WriteLimit from I and started again with just the new rules about NotifyRead and NotifyWrite. Instead of using WriteLimit = Len(Got) + BufferSize to indicate that the sender has accurate information, I made a new SenderInfoAccurate that just returns TRUE whenever the sender will fill the buffer without further help. That avoids some unnecessary arithmetic, which TLAPS needs a lot of help with.

By talking about accuracy instead of the write limit, I was also able to include "Done" in with the other happy cases. Before, that had to be treated as a possible problem because the sender can't use the full buffer when it's Done.

With this change, the proof of Spec => []I became much simpler (384 lines shorter). And most of the remaining steps were trivial.

The ReadLimit and WriteLimit idea still seemed useful, though, but I found I was able to prove the same things from I. For example, we can still conclude this, even if I doesn't mention WriteLimit:

That's nice, because it keeps the invariant and its proofs simple, but we still get the same result in the end.

I initially defined WriteLimit to be the number of bytes the sender could write if the sending application wanted to send enough data, but I later changed it to be the actual number of bytes it would write if the application didn't try to send any more. This is because otherwise, with packet-based sends (where we only write when the buffer has enough space for the whole message at once), WriteLimit could go down. For example, we think we can write another 3 bytes, but then the application decides to write 10 bytes and now we can't write anything more.

The limit theorems above are useful properties, but it would be good to have more confidence that ReadLimit and WriteLimit are correct. I was able to prove some useful lemmas here.

First, ReceiverRead steps don't change ReadLimit (as long as the receiver hasn't closed the connection):

This gives us a good reason to think that ReadLimit is correct:

- When the receiver is blocked it cannot read any more than it has without help. ReadLimit is defined to be Len(Got) then, so ReadLimit is obviously correct for this case.

- Since read steps preserve ReadLimit, this shows that ReadLimit is correct in all cases.

For example, if ReadLimit = 5 and no other processes do anything, then we will end up in a state with the receiver blocked, and ReadLimit = Len(Got) = 5, and so we really did read a total of 5 bytes.

I was also able to prove that it never decreases (unless the receiver closes the connection):

So, if ReadLimit = n then it will always be at least n, and if the receiver ever blocks then it will have read at least n bytes.

I was able to prove similar properties about WriteLimit. So, I feel reasonably confident that these limit predictions are correct.

Disappointingly, we can't actually prove Availability using TLAPS, because currently it understands very little temporal logic (see TLAPS limitations). However, I could show that the system can't deadlock while there's data to be transmitted:

I've included the proof of DeadlockFree1 above:

- To show deadlock can't happen, it suffices to assume it has happened and show a contradiction.

- If both processes are blocked then NotifyRead and NotifyWrite must both be set (because processes don't block without setting them, and if they'd been unset then an interrupt would now be pending and we wouldn't be blocked).

- Since NotifyRead is still set, the sender is correct in thinking that the buffer is still full.

- Since NotifyWrite is still set, the receiver is correct in thinking that the buffer is still empty.

- That would be a contradiction, since BufferSize isn't zero.

If it doesn't deadlock, then some process must keep getting woken up by interrupts, which means that interrupts keep being sent. We only send interrupts after making progress (writing to the buffer or reading from it), so we must keep making progress. We'll have to content ourselves with that argument.

Experiences with TLAPS

The toolbox doesn't come with the proof system, so you need to install it separately. The instructions are out-of-date and have a lot of broken links. In May, I turned the steps into a Dockerfile, which got it partly installed, and asked on the TLA group for help, but no-one else seemed to know how to install it either. By looking at the error messages and searching the web for programs with the same names, I finally managed to get it working in December. If you have trouble installing it too, try using my Docker image.

Once installed, you can write a proof in the toolbox and then press Ctrl-G, Ctrl-G to check it. On success, the proof turns green. On failure, the failing step turns red. You can also do the Ctrl-G, Ctrl-G combination on a single step to check just that step. That's useful, because it's pretty slow. It takes more than 10 minutes to check the complete specification.

TLA proofs are done in the mathematical style, which is to write a set of propositions and vaguely suggest that thinking about these will lead you to the proof. This is good for building intuition, but bad for reproducibility. A mathematical proof is considered correct if the reader is convinced by it, which depends on the reader. In this case, the "reader" is a collection of automated theorem-provers with various timeouts. This means that whether a proof is correct or not depends on how fast your computer is, how many programs are currently running, etc. A proof might pass one day and fail the next. Some proof steps consistently pass when you try them individually, but consistently fail when checked as part of the whole proof. If a step fails, you need to break it down into smaller steps.

Sometimes the proof system is very clever, and immediately solves complex steps. For example, here is the proof that the SenderClose process (which represents the sender closing the channel) preserves the invariant I:

A step such as IntegrityI' BY DEF IntegrityI says "You can see that IntegrityI will be true in the next step just by looking at its definition". So this whole lemma is really just saying "it's obvious". And TLAPS agrees.

At other times, TLAPS can be maddeningly stupid. And it can't tell you what the problem is - it can only make things go red.

For example, this fails:

We're trying to say that pc[2] is unchanged, given that pc' is the same as pc except that we changed pc[1]. The problem is that TLA is an untyped language. Even though we know we did a mapping update to pc, that isn't enough (apparently) to conclude that pc is in fact a mapping. To fix it, you need:

The extra pc \in [Nat -> STRING] tells TLA the type of the pc variable. I found missing type information to be the biggest problem when doing proofs, because you just automatically assume that the computer will know the types of things.

Another example:

We're just trying to remove the x + ... from both sides of the equation. The problem is, TLA doesn't know that Min(y, 10) is a number, so it doesn't know whether the normal laws of addition apply in this case. It can't tell you that, though - it can only go red.

Here's the solution:

The BY DEF Min tells TLAPS to share the definition of Min with the solvers. Then they can see that Min(y, 10) must be a natural number too and everything works.

Another annoyance is that sometimes it can't find the right lemma to use, even when you tell it exactly what it needs. Here's an extreme case:

TransferFacts states some useful facts about transferring data between two variables. You can prove that quite easily. SameAgain is identical in every way, and just refers to TransferFacts for the proof. But even with only one lemma to consider - one that matches all the assumptions and conclusions perfectly - none of the solvers could figure this one out!

My eventual solution was to name the bundle of results. This works:

Most of the art of using TLAPS is in controlling how much information to share with the provers. Too little (such as failing to provide the definition of Min) and they don't have enough information to find the proof. Too much (such as providing the definition of TransferResults) and they get overwhelmed and fail to find the proof.

It's all a bit frustrating, but it does work, and being machine checked does give you some confidence that your proofs are actually correct.

Another, perhaps more important, benefit of machine checked proofs is that when you decide to change something in the specification you can just ask it to re-check everything. Go and have a cup of tea, and when you come back it will have highlighted in red any steps that need to be updated. I made a lot of changes, and this worked very well.

The TLAPS philosophy is that

If you are concerned with an algorithm or system, you should not be spending your time proving basic mathematical facts. Instead, you should assert the mathematical theorems you need as assumptions or theorems.

So even if you can't find a formal proof of every step, you can still use TLAPS to break it down into steps that you can either prove, or that you think are obvious enough that they don't require a proof. However, I was able to prove everything I needed for the vchan specification within TLAPS.

The final specification

I did a little bit of tidying up at the end. In particular, I removed the want variable from the specification. I didn't like it because it doesn't correspond to anything in the OCaml implementation, and the only place the algorithm uses it is to decide whether to set NotifyWrite, which I thought might be wrong anyway.

I changed this:

to:

That always allows an implementation to set NotifyWrite if it wants to, or to skip that step just as long as have > 0. That covers the current C behaviour, my proposed C behaviour, and the OCaml implementation. It also simplifies the invariant, and even made the proofs shorter!

I put the final specification online at spec-vchan. I also configured Travis CI to check all the models and verify all the proofs. That's useful because sometimes I'm too impatient to recheck everything on my laptop before pushing updates.

You can generate a PDF version of the specification with make pdfs. Expressions there can be a little easier to read because they use proper symbols, but it also breaks things up into pages, which is highly annoying. It would be nice if it could omit the proofs too, as they're really only useful if you're trying to edit them. I'd rather just see the statement of each theorem.

The original bug

With my new understanding of vchan, I couldn't see anything obviously wrong with the C code (at least, as long as you keep the connection open, which the firewall does).

I then took a look at ocaml-vchan. The first thing I noticed was that someone had commented out all the memory barriers, noting in the Git log that they weren't needed on x86. I am using x86, so that's not it, but I filed a bug about it anyway: Missing memory barriers.

The other strange thing I saw was the behaviour of the read function. It claims to implement the Mirage FLOW interface, which says that read "blocks until some data is available and returns a fresh buffer containing it". However, looking at the code, what it actually does is to return a pointer directly into the shared buffer. It then delays updating the consumer counter until the next call to read. That's rather dangerous, and I filed another bug about that: Read has very surprising behaviour. However, when I checked the mirage-qubes code, it just takes this buffer and makes a copy of it immediately. So that's not the bug either.

Also, the original bug report mentioned a 10 second timeout, and neither the C implementation nor the OCaml one had any timeouts. Time to look at QubesDB itself.

QubesDB accepts messages from either the guest VM (the firewall) or from local clients connected over Unix domain sockets. The basic structure is:

The suspicion was that we were missing a vchan event, but then it was discovering that there was data in the buffer anyway due to the timeout. Looking at the code, it does seem to me that there is a possible race condition here:

- A local client asks to send some data. handle_client_data sends the data to the firewall using a blocking write.

- The firewall sends a message to QubesDB at the same time and signals an event because the firewall-to-db buffer has data.

- QubesDB gets the event but ignores it because it's doing a blocking write and there's still no space in the db-to-firewall direction.

- The firewall updates its consumer counter and signals another event, because the buffer now has space.

- The blocking write completes and QubesDB returns to the main loop.

- QubesDB goes to sleep for 10 seconds, without checking the buffer.

I don't think this is the cause of the bug though, because the only messages the firewall might be sending here are QDB_RESP_OK messages, and QubesDB just discards such messages.

I managed to reproduce the problem myself, and saw that in fact QubesDB doesn't make any progress due to the 10 second timeout. It just tries to go back to sleep for another 10 seconds and then immediately gets woken up by a message from a local client. So, it looks like QubesDB is only sending updates every 10 seconds because its client, qubesd, is only asking it to send updates every 10 seconds! And looking at the qubesd logs, I saw stacktraces about libvirt failing to attach network devices, so I read the Xen network device attachment specification to check that the firewall implemented that correctly.

I'm kidding, of course. There isn't any such specification. But maybe this blog post will inspire someone to write one...

Conclusions

As users of open source software, we're encouraged to look at the source code and check that it's correct ourselves. But that's pretty difficult without a specification saying what things are supposed to do. Often I deal with this by learning just enough to fix whatever bug I'm working on, but this time I decided to try making a proper specification instead. Making the TLA specification took rather a long time, but it was quite pleasant. Hopefully the next person who needs to know about vchan will appreciate it.

A TLA specification generally defines two sets of behaviours. The first is the set of desirable behaviours (e.g. those where the data is delivered correctly). This definition should clearly explain what users can expect from the system. The second defines the behaviours of a particular algorithm. This definition should make it easy to see how to implement the algorithm. The TLC model checker can check that the algorithm's behaviours are all acceptable, at least within some defined limits.

Writing a specification using the TLA notation forces us to be precise about what we mean. For example, in a prose specification we might say "data sent will eventually arrive", but in an executable TLA specification we're forced to clarify what happens if the connection is closed. I would have expected that if a sender writes some data and then closes the connection then the data would still arrive, but the C implementation of vchan does not always ensure that. The TLC model checker can find a counter-example showing how this can fail in under a minute.

To explain why the algorithm always works, we need to find an inductive invariant. The TLC model checker can help with this, by presenting examples of unreachable states that satisfy the invariant but don't preserve it after taking a step. We must add constraints to explain why these states are invalid. This was easy for the Integrity invariant, which explains why we never receive incorrect data, but I found it much harder to prove that the system cannot deadlock. I suspect that the original designer of a system would find this step easy, as presumably they already know why it works.
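To make the idea of an inductive invariant concrete, here is a toy example in Python rather than TLA. Everything in it is hypothetical and unrelated to vchan: a counter starts at 0 and each step adds 2, within a bound. The property `weak` (the counter is never 7) is true of every reachable state, but it is not inductive, because the unreachable state 5 satisfies it and then steps to 7. Adding the constraint that the counter is even rules that state out.

```python
STATES = range(10)               # the whole (bounded) state space


def init(x):
    return x == 0


def next_states(x):
    """The only action adds 2, as long as the result stays within the bound."""
    return [x + 2] if x + 2 in STATES else []


def inductive_counterexamples(inv):
    """Pairs (s, t) where inv holds in s, s can step to t, and inv fails in t."""
    return [(s, t)
            for s in STATES if inv(s)
            for t in next_states(s) if not inv(t)]


def is_inductive(inv):
    holds_initially = all(inv(s) for s in STATES if init(s))
    return holds_initially and not inductive_counterexamples(inv)


def weak(x):
    return x != 7                # true of all reachable states, but not inductive


def strong(x):
    return weak(x) and x % 2 == 0    # the extra constraint excludes state 5
```

Here `inductive_counterexamples(weak)` reports the step from 5 to 7, which is the kind of unreachable-state example described above, while `is_inductive(strong)` holds.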

Once we have found an inductive invariant, we can write a formal machine-checked proof that the invariant is always true. Although TLAPS doesn't allow us to prove liveness properties directly, I was able to prove various interesting things about the algorithm: it doesn't deadlock; when the sender is blocked, the receiver can read everything that has been sent; and when the receiver is blocked, the sender can fill the entire buffer.

Writing formal proofs is a little tedious, largely because TLA is an untyped language. However, there is nothing particularly difficult about it, once you know how to work around various limitations of the proof checkers.

You might imagine that TLA would only work on very small programs like libvchan, but this is not the case. It's just a matter of deciding what to specify in detail. For example, in this specification I didn't give any details about how ring buffers work, but instead used a single Buffer variable to represent them. For a specification of a larger system using vchan, I would model each channel using just Sent and Got and an action that transferred some of the difference on each step.
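As a rough illustration of that more abstract model, here is a Python sketch of a channel described only by what has been sent and what has been received. The names `next_states`, `integrity` and `check`, the two-byte alphabet and the `MAX_SENT` bound are assumptions made for this example. The `check` function is a plain breadth-first search over reachable states, standing in for what a model checker would do within the given limits.

```python
from collections import deque

MAX_SENT = 3     # bound on the model, so that the state space is finite
BYTES = "ab"     # the possible values of each byte sent


def next_states(state):
    sent, got = state
    # Send: the sender appends one more byte.
    if len(sent) < MAX_SENT:
        for b in BYTES:
            yield (sent + b, got)
    # Transfer: some non-empty part of the difference is delivered.
    for n in range(len(got) + 1, len(sent) + 1):
        yield (sent, sent[:n])


def integrity(state):
    """What has been received is always a prefix of what was sent."""
    sent, got = state
    return sent.startswith(got)


def check(invariant, init=("", "")):
    """Explore every reachable state; return (first violation or None, states seen)."""
    seen = {init}
    queue = deque([init])
    while queue:
        state = queue.popleft()
        if not invariant(state):
            return state, len(seen)
        for t in next_states(state):
            if t not in seen:
                seen.add(t)
                queue.append(t)
    return None, len(seen)
```

Calling `check(integrity)` explores the bounded model and reports no violation. A larger system could reuse the same two-variable view of each channel without saying anything about ring buffers or counters.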

The TLA Toolbox has some rough edges. The ones I found most troublesome were: the keyboard shortcuts frequently stop working; when a temporal property is violated, it doesn't tell you which one it was; and the model explorer tooltips appear right under the mouse pointer, preventing you from scrolling with the mouse wheel. It also likes to check its "news feed" on a regular basis. It can't seem to do this at the same time as other operations, and if you're in the middle of a particularly complex proof checking operation, it will sometimes suddenly pop up a box suggesting that you cancel your job, so that it can get back to reading the news.

However, it is improving. In the latest versions, when you get a syntax error, it now tells you where in the file the error is. And pressing Delete or Backspace while editing no longer causes it to crash and lose all unsaved data. In general I feel that the TLA Toolbox is quite usable now. If I were designing a new protocol, I would certainly use TLA to help with the design.

TLA does not integrate with any language type systems, so even after you have a specification you still need to check manually that your code matches the spec. It would be nice if you could check this automatically, somehow.

One final problem is that whenever I write a TLA specification, I feel the need to explain first what TLA is. Hopefully it will become more popular and that problem will go away.

Update 2019-01-10: Marek Marczykowski-Górecki told me that the state model for network devices is the same as the one for block devices, which is documented in the blkif.h block device header file, and provided libvirt debugging help - so the bug is now fixed!