In this post I describe three approaches to building a language for writing CI/CD pipelines. My first attempt used a monad, but this prevented static analysis of the pipelines. I then tried using an arrow, but found the syntax very difficult to use. Finally, I ended up using a light-weight alternative to arrows that I will refer to here as a dart (I don't know if this has a name already). This allows for static analysis like an arrow, but has a syntax even simpler than a monad.

Table of Contents

- Introduction

- Attempt one: a monad

- Attempt two: an arrow

- Attempt three: a dart

- Comparison with arrows

- Larger examples

- Conclusions

( this post also appeared on Reddit and Lobsters )

Introduction

I was asked to build a system for creating CI/CD pipelines. The initial use for it was to build a CI for testing OCaml projects on GitHub (testing each commit against multiple versions of the OCaml compiler and on multiple operating systems). Here's a simple pipeline that gets the Git commit at the head of a branch, builds it, and then runs the tests:

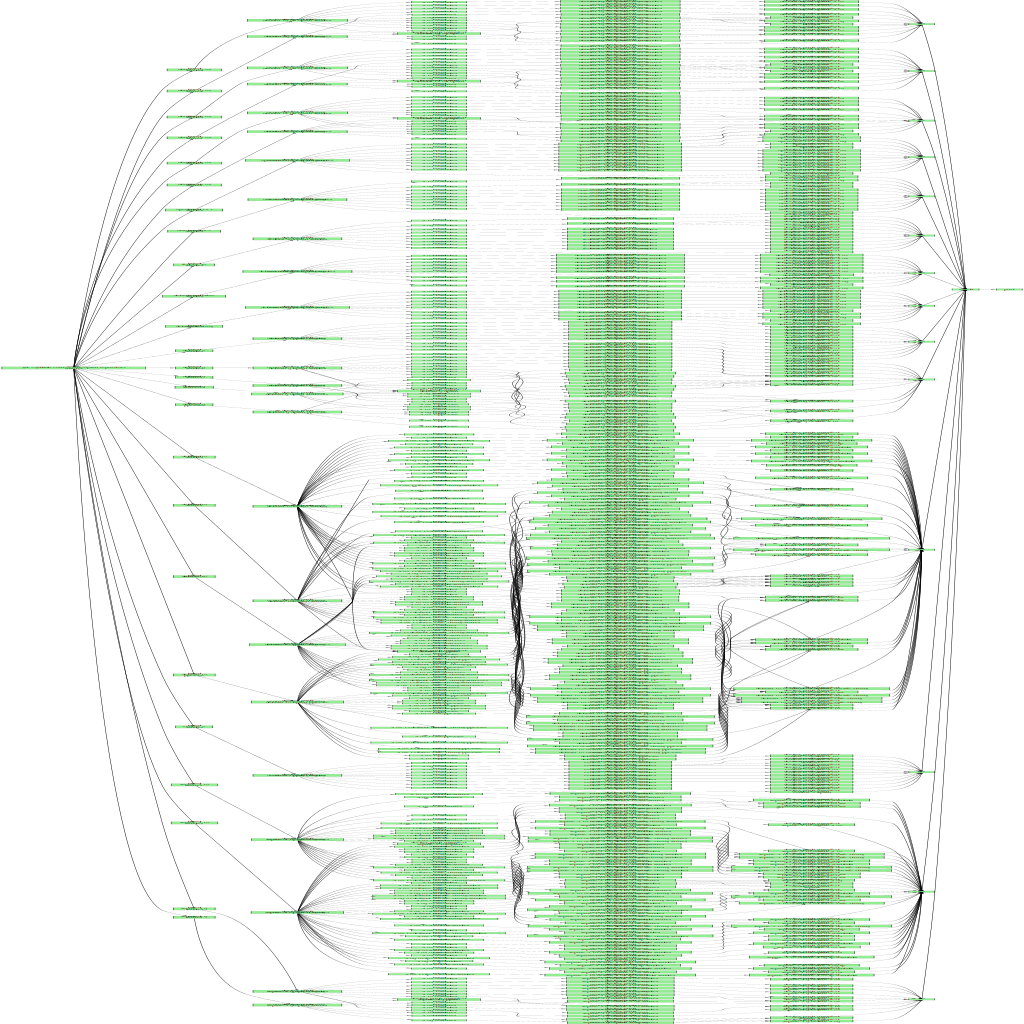

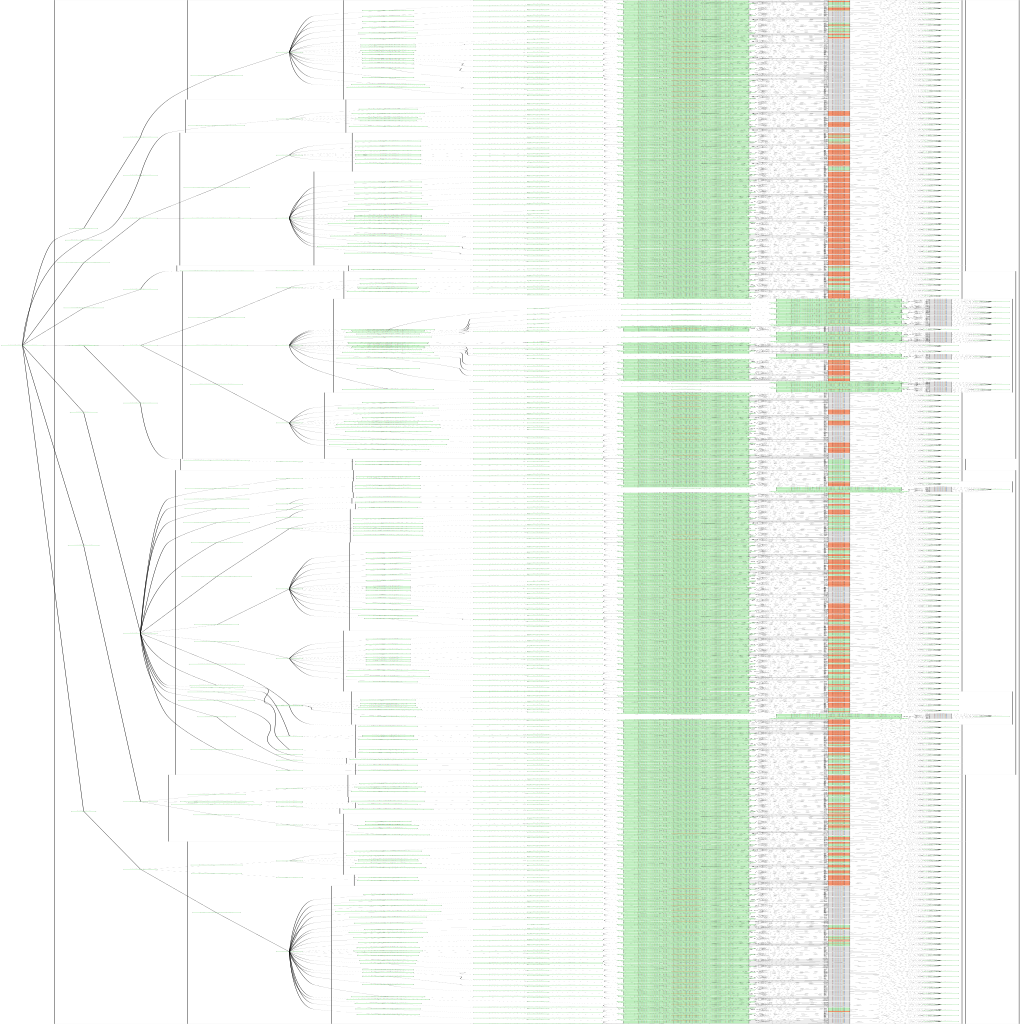

The colour-scheme here is that green boxes are completed, orange ones are in progress and grey means the step can't be started yet.

Here's a slightly more complex example, which also downloads a Docker base image, builds the commit in parallel using two different versions of the OCaml compiler, and then tests the resulting images. Here the red box indicates that this step failed:

A more complex example is testing the project itself and then searching for other projects that depend on it and testing those against the new version too:

Here, the circle means that we should wait for the tests to pass before checking the reverse dependencies.

We could describe these pipelines using YAML or similar, but that would be very limiting. Instead, I decided to use an Embedded Domain Specific Language, so that we can use the host language's features for free (e.g. string manipulation, variables, functions, imports, type-checking, etc).

The most obvious approach is making each box a regular function. Then the first example above could be (here, using OCaml syntax):

1 2 3 4 | |

The second could be:

1 2 3 4 5 6 7 8 9 10 | |

And the third might look something like this:

1 2 3 4 5 6 | |

However, we'd like to add some extras to the language:

- Pipeline steps should run in parallel when possible.

The

example2function above would do the builds one at a time. - Pipeline steps should be recalculated whenever their input changes. e.g. when a new commit is made we need to rebuild.

- The user should be able to view the progress of each step.

- The user should be able to trigger a rebuild for any step.

- We should be able to generate the diagrams automatically from the code, so we can see what the pipeline will do before running it.

- The failure of one step shouldn't stop the whole pipeline.

The exact extras don't matter too much to this blog post, so for simplicity I'll focus on just running steps concurrently.

Attempt one: a monad

Without the extra features, we have functions like this:

1 2 | |

You can read this as "build is a function that takes a source value and returns a (Docker) image".

These functions compose together easily to make a larger function that will fetch a commit and build it:

1 2 3 | |

We could also shorten this to build (fetch c) or to fetch c |> build.

The |> (pipe) operator in OCaml just calls the function on its right with the argument on its left.

To extend these functions to be concurrent, we can make them return promises, e.g.

1 2 | |

But now we can't compose them easily using let (or |>), because the output type of fetch doesn't match the input of build.

However, we can define a similar operation, let* (or >>=) that works with promises. It immediately returns a promise for the final

result, and calls the body of the let* later, when the first promise is fulfilled. Then we have:

1 2 3 | |

In order words, by sprinkling a few * characters around we can turn our plain old pipeline into a new concurrent one!

The rules for when you can compose promise-returning functions using let* are exactly the same as the rules about when

you can compose regular functions using let, so writing programs using promises is just as easy as writing regular programs.

Just using let* doesn't add any concurrency within our pipeline

(it just allows it to execute concurrently with other code).

But we can define extra functions for that, such as all to evaluate every promise in a list at once,

or an and* operator to indicate that two things should run in parallel:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 | |

As well as handling promises,

we could also define a let* for functions that might return errors (the body of the let is called only if the first value

is successful), or for live updates (the body is called each time the input changes), or for all of these things together.

This is the basic idea of a monad.

This actually works pretty well. In 2016, I used this approach to make DataKitCI, which was used initially as the CI system for Docker-for-Mac. Later, Anil Madhavapeddy used it to create opam-repo-ci, which is the CI system for opam-repository, OCaml's main package repository. This checks each new PR to see what packages it adds or modifies, tests each one against multiple OCaml compiler versions and Linux distributions (Debian, Ubuntu, Alpine, CentOS, Fedora and OpenSUSE), and then finds all versions of all packages depending on the changed packages and tests those too.

The main problem with using a monad is that we can't statically analyse the pipeline.

Consider the example2 function above. Until we have queried GitHub to get a commit to

test, we cannot run the function and therefore have no idea what it will do.

Once we have commit we can call example2 commit,

but until the fetch and docker_pull operations complete we cannot evaluate the body of the let* to find out what the pipeline will do next.

In other words, we can only draw diagrams showing the bits of the pipeline that have already

executed or are currently executing, and we must indicate opportunities for concurrency

manually using and*.

Attempt two: an arrow

An arrow makes it possible to analyse pipelines statically. Instead of our monadic functions:

1 2 | |

we can define an arrow type:

1 2 3 4 | |

An ('a, 'b) arrow is a pipeline that takes an input of type 'a and produces a result of type 'b.

If we define type ('a, 'b) arrow = 'a -> 'b promise then this is the same as the monadic version.

However, we can instead make the arrow type abstract and extend it to store whatever static information we require.

For example, we could label the arrows:

1 2 3 4 | |

Here, arrow is a record. f is the old monadic function and label is the "static analysis".

Users can't see the internals of the arrow type, and must build up pipelines using functions provided by the arrow implementation.

There are three basic functions available:

1 2 3 | |

arr takes a pure function and gives the equivalent arrow.

For our promise example, that means the arrow returns a promise that is already fulfilled.

>>> joins two arrows together.

first takes an arrow from 'a to 'b and makes it work on pairs instead.

The first element of the pair will be processed by the given arrow and

the second component is returned unchanged.

We can have these operations automatically create new arrows with appropriate f and label fields.

For example, in a >>> b, the resulting label field could be the string {a.label} >>> {b.label}.

This means that we can display the pipeline without having to run it first,

and we could easily replace label with something more structured if needed.

With this our first example changes from:

1 2 3 4 | |

to

1 2 | |

That seems quite pleasant, although we did have to give up our variable names.

But things start to get complicated with larger examples. For example2, we

need to define a few standard combinators:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 | |

Then, example2 changes from:

1 2 3 4 5 6 7 8 9 10 | |

to:

1 2 3 4 5 6 7 8 9 10 | |

We've lost most of the variable names and instead have to use tuples, remembering where our values are.

It's not too bad here with two values,

but it gets very difficult very quickly as more are added and we start nesting tuples.

We also lost the ability to use an optional labelled argument in build ~dockerfile src

and instead need to use a new operation that takes a tuple of the dockerfile and the source.

Imagine that running the tests now requires getting the test cases from the source code.

In the original code, we'd just change test image to test image ~using:src.

In the arrow version, we need to duplicate the source before the build step,

run the build with first build_with_dockerfile,

and make sure the arguments are the right way around for a new test_using.

Attempt three: a dart

I started wondering whether there might be an easier way to achieve the same static analysis that you get with arrows,

but without the point-free syntax, and it seems that there is. Consider the monadic version of example1.

We had:

1 2 3 4 5 6 7 | |

If you didn't know about monads, there is another way you might try to do this.

Instead of using let* to wait for the fetch to complete and then calling build with

the source, you might define build and test to take promises as inputs:

1 2 | |

After all, fetching gives you a source promise and you want an image promise, so this seems very natural.

We could even have example1 take a promise of the commit.

Then it looks like this:

1 2 3 4 | |

That's good, because it's identical to the simple version we started with. The problem is that it is inefficient:

- We call

example1with the promise of the commit (we don't know what it is yet). - Without waiting to find out which commit we're testing, we call

fetch, getting back a promise of some source. - Without waiting to get the source, we call

build, getting a promise of an image. - Without waiting for the build, we call

test, getting a promise of the results.

We return the final promise of the test results immediately, but we haven't done any real work yet. Instead, we've built up a long chain of promises, wasting memory.

However, in this situation what we want is to perform a static analysis. i.e. we want to build up in memory some data structure representing the pipeline... and this is exactly what our "inefficient" use of the monad produces!

To make this useful, we need the primitive operations (such as fetch)

to provide some information (e.g. labels) for the static analysis.

OCaml's let syntax doesn't provide an obvious place for a label,

but I was able to define an operator (let**) that returns a function taking a label argument.

It can be used to build primitive operations like this:

1 2 3 4 | |

So, fetch takes a promise of a commit, does a monadic bind on it to wait for the actual commit and then proceeds as before,

but it labels the bind as a fetch operation.

If fetch took multiple arguments, it could use and* to wait for all of them in parallel.

In theory, the body of the let** in fetch could contain further binds.

In that case, we wouldn't be able to analyse the whole pipeline at the start.

But as long as the primitives wait for all their inputs at the start and don't do any binds internally,

we can discover the whole pipeline statically.

We can choose whether to expose these bind operations to application code or not.

If let* (or let**) is exposed, then applications get to use all the expressive power of monads,

but there will be points where we cannot show the whole pipeline until some promise resolves.

If we hide them, then applications can only make static pipelines.

My approach so far has been to use let* as an escape hatch, so that any required pipeline can be built,

but I later replace any uses of it by more specialised operations. For example, I added:

1

| |

This processes each item in a list that isn't known until runtime.

However, we can still know statically what pipeline we will apply to each item,

even though we don't know what the items themselves are.

list_map could have been implemented using let*, but then we wouldn't be able to see the pipeline statically.

Here are the other two examples, using the dart approach:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 | |

Compared to the original, we have an all to combine the results, and there's an extra let+ base = base when calculating the dockerfile.

let+ is just another syntax for map, used here because I chose not to change the signature of make_dockerfile.

Alternatively, we could have make_dockerfile take a promise of the base image and do the map inside it instead.

Because map takes a pure body (make_dockerfile just generates a string; there are no promises or errors) it doesn't need its own box

on the diagrams and we don't lose anything by allowing its use.

1 2 3 4 5 6 7 | |

This shows another custom operation: gate revdeps ~on:ok is a promise that only resolves once both revdeps and ok have resolved.

This prevents it from testing the library's revdeps until the library's own tests have passed,

even though it could do this in parallel if we wanted it to.

Whereas with a monad we have to enable concurrency explicitly where we want it (using and*),

with a dart we have to disable concurrency explicitly where we don't want it (using gate).

I also added a list_iter convenience function,

and gave it a pretty-printer argument so that we can label the cases in the diagrams once the list inputs are known.

Finally, although I said that you can't use let* inside a primitive,

you can still use some other monad (that doesn't generate diagrams).

In fact, in the real system I used a separate let> operator for primitives.

That expects a body using non-diagram-generating promises provided by the underlying promise library,

so you can't use let* (or let>) inside the body of a primitive.

Comparison with arrows

Given a "dart" you can create an arrow interface from it easily by defining e.g.

1

| |

Then arr is just map and f >>> g is just fun x -> g (f x). first can be defined easily too, assuming you have some kind

of function for doing two things in parallel (like our and* above).

So a dart API (even with let* hidden) is still enough to express any pipeline you can express using an arrow API.

The Haskell Arrow tutorial uses an example where an arrow is a stateful function.

For example, there is a total arrow that returns the sum of its input and every previous input it has been called with.

e.g. calling it three times with inputs 1 2 3 produces outputs 1 3 6.

Running a pipeline on a sequence of inputs returns the sequence of outputs.

The tutorial uses total to define a mean1 function like this:

1

| |

So this pipeline duplicates each input number,

replaces the second one with 1,

totals both streams, and then

replaces each pair with its ratio.

Each time you put another number into the pipeline, you get out the average of all values input so far.

The equivalent code using the dart style would be (OCaml uses /. for floating-point division):

1 2 3 4 | |

That seems more readable to me.

We can simplify the code slightly by defining the standard operators let+ (for map) and and+ (for pair):

1 2 3 4 5 6 7 | |

This is not a great example of an arrow anyway, because we don't use the output of one stateful function as the input to another, so this is actually just a plain applicative.

We could easily extend the example pipeline with another stateful function though,

perhaps by adding some smoothing.

That would look like mean1 >>> smooth in the arrow notation,

and values |> mean |> smooth (or smooth (mean values)) in the dart notation.

Note: Haskell does also have an Arrows syntax extension, which allows the Haskell code to be written as:

1 2 3 4 | |

That's more similar to the dart notation.

Larger examples

I've put up a library using a slightly extended version of these ideas at ocurrent/ocurrent.

The lib_term subdirectory is the part relevant to this blog post, with the various combinators described in TERM.

The other directories handle more concrete details, such as integration with the Lwt promise library,

and providing the admin web UI or the Cap'n Proto RPC interface, as well as plugins with primitives

for using Git, GitHub, Docker and Slack.

OCaml Docker base image builder

ocurrent/docker-base-images contains a pipeline that builds Docker base images for OCaml for various Linux distributions, CPU architectures, OCaml compiler versions and configuration options. For example, to test OCaml 4.09 on Debian 10, you can do:

$ docker run --rm -it ocurrent/opam:debian-10-ocaml-4.09

:~$ ocamlopt --version

4.09.0

:~$ opam depext -i utop

[...]

:~$ utop

----+-------------------------------------------------------------+------------------

| Welcome to utop version 2.4.2 (using OCaml version 4.09.0)! |

+-------------------------------------------------------------+

Type #utop_help for help about using utop.

-( 11:50:06 )-< command 0 >-------------------------------------------{ counter: 0 }-

utop #

Here's what the pipeline looks like (click for full-size):

It pulls the latest Git commit of opam-repository each week, then builds base images containing that and the opam package manager for each distribution version, then builds one image for each supported compiler variant. Many of the images are built on multiple architectures (amd64, arm32, arm64 and ppc64) and pushed to a staging area on Docker Hub. Then, the pipeline combines all the hashes to push a multi-arch manifest to Docker Hub. There are also some aliases (e.g. debian means debian-10-ocaml-4.09 at the moment). Finally, if there is any problem then the pipeline sends the error to a Slack channel.

You might wonder whether we really need a pipeline for this, rather than a simple script run from a cron-job. But having a pipeline allows us to see what the pipeline will do before running it, watch the pipeline's progress, restart failed jobs individually, etc, with almost the same code we would have written anyway.

You can read pipeline.ml if you want to see the full pipeline.

OCaml CI

ocurrent/ocaml-ci is an (experimental) GitHub app for testing OCaml projects. The pipeline gets the list of installations of the app, gets the configured repositories for each installation, gets the branches and PRs for each repository, and then tests the head of each one against multiple Linux distributions and OCaml compiler versions. If the project uses ocamlformat, it also checks that the commit is formatted exactly as ocamlformat would do it.

The results are pushed back to GitHub as the commit status, and also recorded in a local index for the web and tty UIs. There's quite a lot of red here mainly because if a project doesn't support a particular version of OCaml then the build is marked as failed and shows up as red in the pipeline, although these failures are filtered out when making the GitHub status report. We probably need a new colour for skipped stages.

Conclusions

It's convenient to write CI/CD pipelines as if they were single-shot scripts that run the steps once, in series, and always succeed, and then with only minor changes have the pipeline run the steps whenever the input changes, in parallel, with logging, error reporting, cancellation and rebuild support.

Using a monad allows any program to be converted easily to have these features, but, as with a regular program, we don't know what the program will do with some data until we run it. In particular, we can only automatically generate diagrams showing steps that have already started.

The traditional way to do static analysis is to use an arrow. This is a little more limited than a monad, because the structure of the pipeline can't change depending on the input data, although we can add limited flexibility such as optional steps or a choice between two branches. However, writing pipelines using arrow notation is difficult because we have to program in a point-free style (without variables).

We can get the same benefits of static analysis by using a monad in an unusual way, here referred to as a "dart". Instead of functions that take plain values and return wrapped values, our functions both take and return wrapped values. This results in a syntax that looks identical to plain programming, but allows static analysis (at the cost of not being able to manipulate the wrapped values directly).

If we hide (or don't use) the monad's let* (bind) function then the pipelines we

create can always be determined statically. If we use a bind, then there will be holes

in the pipeline that may expand to more pipeline stages as the pipeline runs.

Primitive steps can be created by using a single "labelled bind", where the label provides the static analysis for the atomic component.

I haven't seen this pattern used before (or mentioned in the arrow documentation), and it seems to provide exactly the same benefits as arrows with much less difficulty. If this has a proper name, let me know!

This work was funded by OCaml Labs.